AI: What it is, where it's headed, and how Stevens is using it to make life healthier, safer and more secure

Deep learning. Machine learning. Neural networks. AI.

Everyone, it seems, is talking about artificial intelligence these days.

But what is it?

AI, a broad term that can describe either a grandiose goal or a toolbox of technologies and techniques, is poised to profoundly transform the ways we do business, monitor our health, commute to work and more.

In fact, that's already happening. AI is now a $20 billion global industry — and investment in it is projected to triple in just the next three years. It's gradually reshaping many aspects of our daily lives right now, right beneath our noses.

Apple's Siri and Amazon's Echo rely on AI techniques. So do Google Maps and Google Translate, and so does Amazon's recommendation engine. IBM uses AI to help predict the weather.

AI even helps your phone recognize you as, well, you — even on bad hair days.

The next wave of cars, hospitals and manufacturing plants will almost certainly incorporate AI technologies too.

A Swiss bus runs daily through its village and on to Europe's largest waterfall without a driver, having intelligently "learned" to drive the route safely. An experimental deep learning-based system uses algorithms to read chest X-rays, find lung cancers (with 99 percent accuracy) and classify them, all in about 20 seconds. In tests, AI has also proven highly reliable at diagnosing eye diseases from retinal scans, and AI systems have trained themselves to spot developing brain cancers in MRI scans of the head.

"Systems that can analyze what's happening in hospitals and detect potential mistakes can save an enormous number of lives," noted leading AI thinker Oren Etzioni, director of the Allen Institute for Artificial Intelligence, during a 2017 talk on the Stevens campus.

Stevens is a growing player in this push to harness AI for societal benefit.

The Stevens Institute for Artificial Intelligence (SIAI) launched last spring, with more than 50 faculty now affiliated, and provided a look under the hood at more than a dozen working projects during a special on-campus event in late November.

Prominent national voices in the AI space, including Etzioni, Google research director Peter Norvig and MIT robotics pioneer Daniela Rus, have visited Castle Point to share new insights and discuss the future of artificial intelligence.

And researchers across campus are deploying AI in disciplines ranging from medicine and security to emergency planning and sports science.

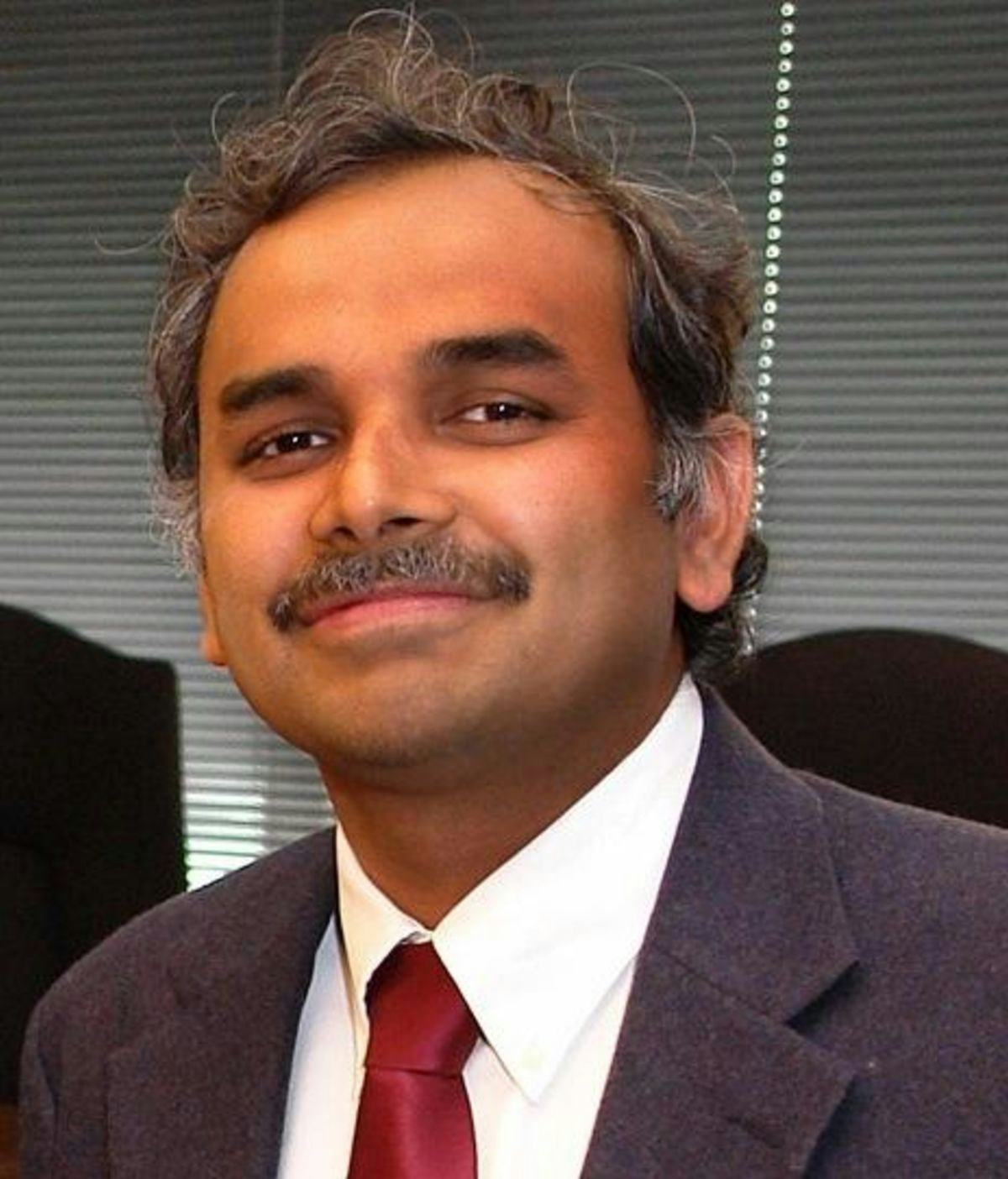

"Simply put, our vision is to drive AI research and application that solves some of those tough 'big' problems that have so far resisted solving," says electrical and computer engineering professor K.P. "Suba" Subbalakshmi, founding director of SIAI.

"There's a lot of impressive work in the field happening at Stevens right now," agrees Giuseppe Ateniese, a leading cybersecurity innovator and chair of Stevens' Department of Computer Science.

Leveraging AI to diagnose diseases, spot fraud

Some of the projects on campus grew organically from interests that predated AI's sudden renaissance.


For more than a decade, Subbalakshmi and fellow Stevens professor Rajarathnam "Mouli" Chandramouli have leveraged AI to produce a series of remarkable innovations offering increasing power and accuracy with applications to everything from elder care to banking fraud to homeland security.

Their work began with a government official's offhand suggestion to pursue automated ways of identifying hidden messages concealed within normal conversations. From there, they became interested in deception detection and built an intelligent set of algorithms to detect lies in writing or speech. It performed well and became the basis of a startup.

Later, doctoral student Zongru (Doris) Shao Ph.D. '18 joined the team, which had begun developing applications to address broader societal challenges.

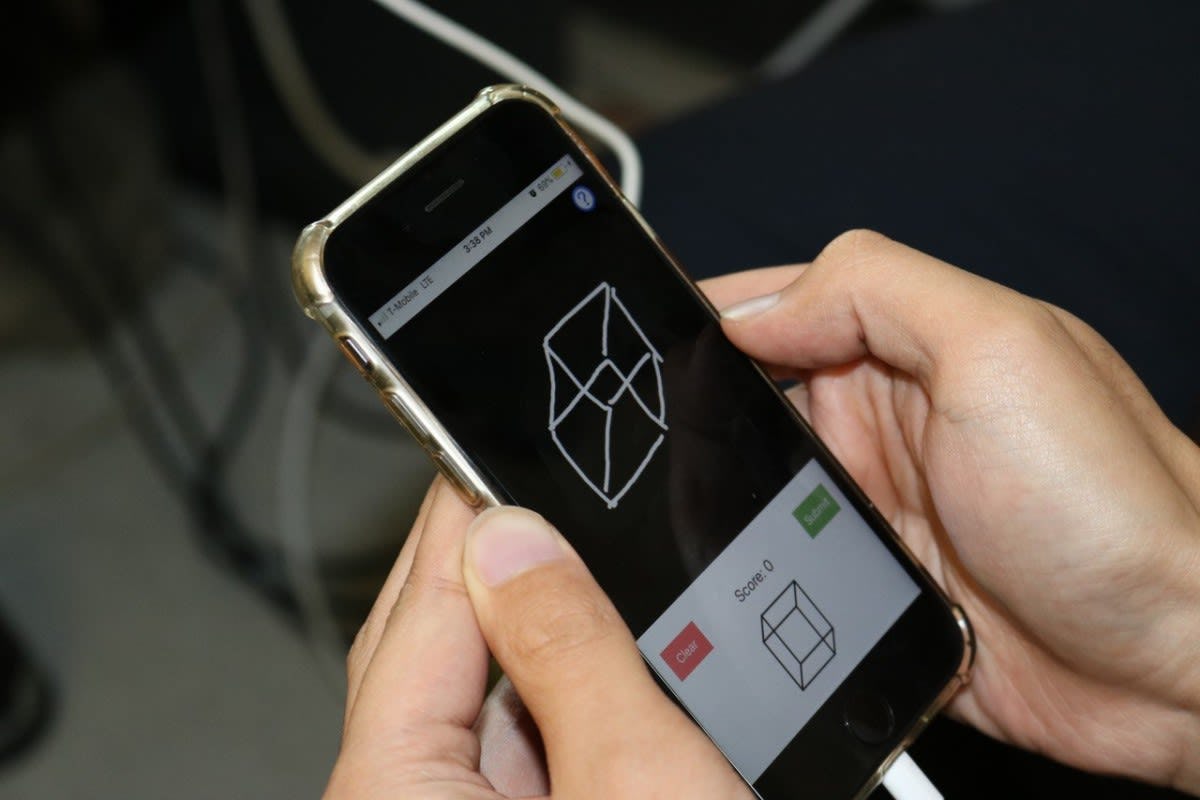

In one widely reported project, the trio trained software to scan written text and phone conversations, then recognize likely early symptoms of Alzheimer's disease, dementia or aphasia. More recently, Ph.D. student Harish Sista created an iPhone app called CoCoA-Bot that tests various cognitive abilities, an effort to apply the team's algorithms to personal health concerns in a more user-friendly way.

Another tool, developed by the team in collaboration with longtime Stevens partner Accenture, uses AI to spot likely signs of insider trading or other financial fraud.

"The reason we have been investing in this is simple: because we truly believe in it," noted Sharad Sachdev, a managing director and analytics lead with Accenture.

To create that product, the trio fed an analytic engine large quantities of deceptive emails and routine communications, including roughly 500,000 Enron emails placed into the public record during the federal investigation that followed the company's 2001 collapse. The software quickly learned to pick out rare instances of criminal communication from a huge volume of innocuous conversation. And, in true deep-learning fashion, it got better at the task as it processed more examples.

"Traditional machine-learning algorithms actually don't work that well for the sort of case where you have millions of routine emails and just a few suspicious ones," explains Subbalakshmi. "We had to develop a new one that would."
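Why a few suspicious emails among millions cause trouble can be shown with simple arithmetic. The sketch below uses made-up counts (one million routine emails, 50 suspicious ones; these are illustrative assumptions, not figures from the Stevens or Accenture work) and is not the team's algorithm. It compares a "lazy" model that never flags anything with a hypothetical model that catches most suspicious emails at the cost of some false alarms.

```python
# Hypothetical counts for illustration only; not data from the Stevens/Accenture project.
ROUTINE = 1_000_000
SUSPICIOUS = 50

def evaluate(true_pos: int, false_pos: int) -> dict:
    """Score a classifier from how many suspicious emails it flagged correctly
    (true_pos) and how many routine emails it flagged by mistake (false_pos)."""
    true_neg = ROUTINE - false_pos
    total = ROUTINE + SUSPICIOUS
    accuracy = (true_pos + true_neg) / total
    recall = true_pos / SUSPICIOUS  # share of suspicious emails actually caught
    flagged = true_pos + false_pos
    precision = true_pos / flagged if flagged else 0.0
    return {"accuracy": accuracy, "recall": recall, "precision": precision}

# A "lazy" model that labels every email as routine.
lazy = evaluate(true_pos=0, false_pos=0)
# A hypothetical model that catches 40 of the 50 but raises 200 false alarms.
useful = evaluate(true_pos=40, false_pos=200)

print(f"lazy:   accuracy={lazy['accuracy']:.5f} recall={lazy['recall']:.2f}")
print(f"useful: accuracy={useful['accuracy']:.5f} recall={useful['recall']:.2f}")
# lazy:   accuracy=0.99995 recall=0.00
# useful: accuracy=0.99979 recall=0.80
```

The lazy model scores slightly *higher* accuracy while catching nothing, which is why methods tuned to overall accuracy can miss the rare cases that matter; measures such as recall on the suspicious class tell the real story.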

The software works by picking out unusual patterns in speech, the use of certain code words and other signals. It also uses context to separate legitimate sales chatter from suspicious material. A conversation about buying potatoes could just be about buying potatoes. But if that conversation starts suddenly, between traders at a brokerage, with no surrounding talk about food shopping? The tool can flag that.
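The potatoes example can be made concrete with a deliberately simple, rule-based sketch. It is not the Stevens/Accenture tool, which relies on machine learning; the topic word list, the `flag_out_of_context` function and the window size are all hypothetical stand-ins. A message is flagged when it raises a topic that none of its neighboring messages touch.

```python
# Hypothetical topic vocabulary; a real system would learn such associations from data.
TOPIC_WORDS = {
    "food": {"potatoes", "groceries", "dinner", "recipe", "lunch"},
}

def topics_in(text: str) -> set[str]:
    """Return the topics whose keywords appear in a message."""
    words = {w.strip(".,?!").lower() for w in text.split()}
    return {topic for topic, vocab in TOPIC_WORDS.items() if words & vocab}

def flag_out_of_context(thread: list[str], window: int = 2) -> list[int]:
    """Return indices of messages whose topic appears in none of the
    `window` messages on either side: a crude proxy for 'sudden' talk."""
    flagged = []
    for i, text in enumerate(thread):
        start, end = max(0, i - window), min(len(thread), i + window + 1)
        neighbors = [thread[j] for j in range(start, end) if j != i]
        for topic in topics_in(text):
            if not any(topic in topics_in(n) for n in neighbors):
                flagged.append(i)
                break
    return flagged

# Messages between two traders on a brokerage desk (invented examples).
trading_desk = [
    "Bond desk closes early today.",
    "Did you see the earnings call?",
    "Buy potatoes before Friday.",
    "Understood.",
]
# The same keyword inside an ordinary dinner conversation.
dinner_plans = [
    "What should we make for dinner?",
    "Pick up potatoes on the way home.",
]

print(flag_out_of_context(trading_desk))  # [2]
print(flag_out_of_context(dinner_plans))  # []
```

Hand-written word lists like this break easily, which is one reason a production tool would learn from data which patterns and contexts matter rather than relying on fixed rules.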

The trio is also testing and developing machine-learning tools that can authenticate voices. Voices are increasingly used to lock and unlock devices, data and even bank accounts, and falsified voices pose a growing threat.

To fight back, the researchers fed a large database of both human and computerized voice samples into an analytic system known as a convolutional neural network (CNN) that quickly learned to tell the difference.

"Our best-performing experimental algorithm is already able to distinguish between a real voice and a computer-generated, falsified version as often as 95 percent of the time," notes Shao.

Transforming cybersecurity, robotics, finance

Other AI-based projects are also active throughout the university, including some with important implications for privacy, safety and security.

Ateniese and his teams work on multiple cybersecurity projects that use AI and machine-learning techniques to probe networks and protocols. He led one team to a major breakthrough in password security, building a system that makes faster, more intelligent password guesses. It relies on a machine-learning technique known as a generative adversarial network (GAN), in which two neural networks are trained against each other. In tests, the tool cracked passwords more successfully than the best current hacking tools.

"A machine can quickly learn all our human tendencies when making passwords, then teach itself to go beyond those rules," explains Ateniese. "It discovers hidden patterns we didn't even realize existed in our passwords. This can help us harden our own passwords, and understand how attackers get them."

Another group, headed by Ateniese and Stevens researcher Briland Hitaj, designed a never-before-seen GAN that quickly became scary-good at "stealing" private pictures from a locked phone without ever touching it — one of the first demonstrations of deep learning used for a nefarious purpose. They did it in order to understand the flip side of AI technology, create public awareness, and flag a specific weakness in machine-learning methods so that experts can begin building better protections into the learning models upon which AI depends.

"Whenever we see a new product or software come out with a grand claim of security, we immediately begin looking at it with a critical eye," says Hitaj. "If it leaks even one bit of information, we do not consider it safe nor secure."

There's a nucleus of healthcare-related AI research on the Stevens campus, as well.

Professor Samantha Kleinberg's Health and AI Lab (HAIL) develops models and methods aimed at improving health, including tools that model uncertainty, account for missing data and otherwise enhance analysis of medical data. The research is applicable to treating stroke and diabetes patients, among others. Kleinberg also uses an ingenious setup to harvest chewing sounds and motions from sensors in wearable devices. Sophisticated models then automatically measure the types and quantities of food eaten, so wearers don't have to stop and write them down. The system already monitors nutritional intake roughly as well as we can ourselves.

In another project, professor Negar Tavassolian develops algorithms and learning-based systems that monitor heartbeats for abnormalities in medical settings, even noisy or crowded ones. Other systems in her work hunt for skin cancers, using AI and sophisticated new antennas to create higher-resolution images of the skin.

Finance hasn't been left out. Last November, two Stevens School of Business student teams shared the top prize in a UBS-sponsored competition that asked participants to develop and present AI-driven tools for choosing where to site corporate wealth management branches.

The arts at Stevens integrate AI, as well. College of Arts & Letters (CAL) Dean Kelland Thomas harnesses AI to teach computers the tendencies of jazz masters so they can improvise in real time with live human players, research that may have applications to defense, computing and virtual assistant design. CAL professor Jeff Thompson created a machine learning-powered project on human-computer interactions.

Traditionally technical fields such as engineering have also seen AI swoop in and upgrade performance, and Stevens is no exception.

Researchers across campus use machine learning to experimentally guide robots in a range of tasks, including underwater navigation (led by professor Brendan Englot) and evacuation of public spaces during emergencies (led by professor Yi Guo).

There's also a growing Stevens effort in the exploding field of computer vision, a subset of AI with applications to everything from transportation to elder care to counterterrorism. Projects in this area include work by professor Xinchao Wang to analyze transit, medical center and sports videos, and research by professor Philippos Mordohai toward the development of improved wheelchairs.

"So much going on," sums up Subbalakshmi. "We have such breadth. It's really our strength."

What's next: promise, but cautions

What's next in AI? The sub-fields of image recognition, language processing, robotics and medical imaging will all heat up, say Stevens' experts.

But even as AI races ahead, some roadblocks — and caveats — still remain.

"Privacy and security are major concerns," says Ateniese. "As we and other researchers have already demonstrated, machine-learning models can be compromised, tricked and hacked."

Surveys show most consumers are still very wary of boarding AI-operated vehicles, in part because they fear hackers could create intentional crashes. (Those fears aren't completely unfounded, say security researchers.)

Another sticky issue: machine learning models don't learn perfectly. Because they learn chiefly from words, images and videos that people have published, they reflect our own human weaknesses; after all, we built them. Researchers have begun discovering, for example, that certain biases can become baked right into algorithms if we're not careful.

An MIT Media Lab study recently found, for instance, that commercial facial-analysis systems were markedly more accurate on lighter-skinned faces than on darker-skinned ones. And a Microsoft-built "chatbot," designed to learn from the Twitter users it interacted with, quickly degenerated into a racist, expletive-spouting troll before the company pulled the plug on the unfortunate experiment.

Other studies have shown that AI systems tend to label a person in a kitchen as female, even when it's obvious to any human observer that a man is standing there cooking. Why? Because the photos and films these systems learn from have long reinforced the stereotype that homemakers are women.

Predictive models can also become so tailored to their training data that they offer little useful insight on new questions. A model trained for one task won't necessarily work as well on a similar problem, so a researcher might need to build a brand-new dataset from scratch, a huge task, or tweak and retrain the model.

All things considered, some observers caution that AI may be oversold as a cure-all.

"We haven't achieved true 'intelligence' with machine-learning methods yet," says Hitaj. "That's a very long way off. You can hand a two-year-old child a single photo of a dog, cat or car and it will recognize it as such for the rest of its life. We're nowhere near that level of human intelligence with our models."

Still, most scientists agree AI is here to stay and could profoundly reshape business, medicine, logistics and more. While it can't match the intricate complexity of the human brain, its tools can process some kinds of information and make some kinds of predictions far, far faster than we humans could ever hope to.

"It will permeate everything that we are doing, that's a fact," concludes Subbalakshmi. "But how it's going to permeate sensibly and how that will affect our lives is in our hands, not the machines'."