These Smart Underwater Robots Will Soon Help Us Inspect Where We Don't Want To

Stevens Institute of Technology research team teaches robots and drones to make better maps on the fly, which could one day lead to safer ships, ports, harbors, buildings and skies

Robots have come to play important roles in defense and manufacturing, but autonomous robots that learn in real time and apply that knowledge in the field remain a work in progress.

Now Stevens Institute of Technology is poised to play a major role on several fronts in this burgeoning field, including improving the ways in which robots can help perform dangerous and difficult tasks such as inspecting buildings, oil platforms and ships. Mechanical engineering professor Brendan Englot, an expert in intelligent robotics with industry experience, is leading the university's effort.

Englot, a New York City native who obtained bachelor's, master's and doctoral degrees from MIT and taught part-time at Yale while working for United Technologies as a robotics expert, joined Stevens in 2014 to further explore his growing interest in developing underwater drones that might one day inspect ship hulls and monitor harbors.

It was a natural fit with the university's legacy in maritime engineering.

"One of the things that attracted me to Stevens," he confirms, "was learning the university had a longtime presence in the maritime domain. It seemed there would be many good opportunities here."

Indeed, Englot's work is already considered so important that he recently received a prestigious National Science Foundation CAREER Award of approximately $500,000 to pursue one specific aspect of the robot-navigation problem over the next several years.

From sea lions to smart maps

How are underwater inspections currently performed? Believe it or not, the best-available technology for rooting out bad-guy SCUBA divers and tiny mines attached to a boat is a dolphin or a sea lion.

Dolphins have outstanding sonar abilities that can even find mines buried in two feet of mud; sea lions don't use sonar but have outstanding eyesight, even in the muck, and have been trained by the U.S. Navy to attach clamps to foreign objects. Human divers can do the same tasks, but don't see well in murky water and can't locate hidden objects at all. Then there's the risk factor.

Underwater robots are probably the eventual answer. But teaching those robots to navigate around waters, vessels and structures has proven difficult.

"It's hard to do robotics underwater," Englot explains. "There's lots of noise, the environment is attacking you all the time, pushing you around. Visibility is a problem. Sensing is different because you don't have access to the electromagnetic spectrum. Acoustic signals travel slowly, so there are often multiple hypotheses as to where the signals are coming from. The apertures of your sensors tend to be smaller, so you don't see as much with a given sample. And so on.

"It's a tough environment, but that's what makes the challenge interesting."

To address the challenge, Englot has formed a graduate-student research team in his lab. One of the key members is Kevin Doherty '17, a senior from Jackson, New Jersey, who specializes in developing and refining algorithms for mapping. Doherty's project involves applying machine learning techniques to the problem of building 3D maps from limited information and making them progressively more descriptive on the fly.
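To make the idea of filling in a map from limited information concrete, here is a minimal Python sketch. It is not the Stevens team's algorithm, which the article does not describe in detail; it swaps in plain neighbor averaging on a small 2D grid as a stand-in for the machine learning step. The function name `fill_holes`, the `min_neighbors` threshold and the use of `None` for unobserved cells are all assumptions made for illustration.

```python
def fill_holes(grid, min_neighbors=3):
    """Guess occupancy for unobserved cells from their observed neighbors.

    grid: list of rows; each cell is an occupancy probability in [0, 1],
          or None if the robot has not observed that cell.
    Returns a new grid; the input grid is left unchanged.
    """
    rows, cols = len(grid), len(grid[0])
    out = [row[:] for row in grid]  # copy so estimates never feed each other
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] is not None:
                continue  # already observed, keep the measurement
            # Collect observed values among the (up to) 8 surrounding cells.
            neighbors = [
                grid[rr][cc]
                for rr in range(max(0, r - 1), min(rows, r + 2))
                for cc in range(max(0, c - 1), min(cols, c + 2))
                if (rr, cc) != (r, c) and grid[rr][cc] is not None
            ]
            # Only guess when there is enough nearby evidence.
            if len(neighbors) >= min_neighbors:
                out[r][c] = sum(neighbors) / len(neighbors)
    return out


# Hypothetical patch of map: occupied cells on three sides of a hole.
patch = [
    [1.0, 1.0, 1.0],
    [1.0, None, 1.0],
    [0.0, 0.0, 0.0],
]
filled = fill_holes(patch)
print(filled[1][1])
```

A real system would also have to track how uncertain each guess is, which simple averaging ignores; cells with too few observed neighbors are deliberately left as unknown here.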

"If we can successfully infer about areas we haven't seen, we can plan paths more intelligently based on what obstacles we predict will be there," says Doherty. "Humans do this seamlessly, and incorporate semantics: if I see a section of a wall, it's likely that adjacent to that wall is more of the wall. We're trying to teach the robots to make these inferences instantly as well."

Wiring a Jackal and its underwater cousin

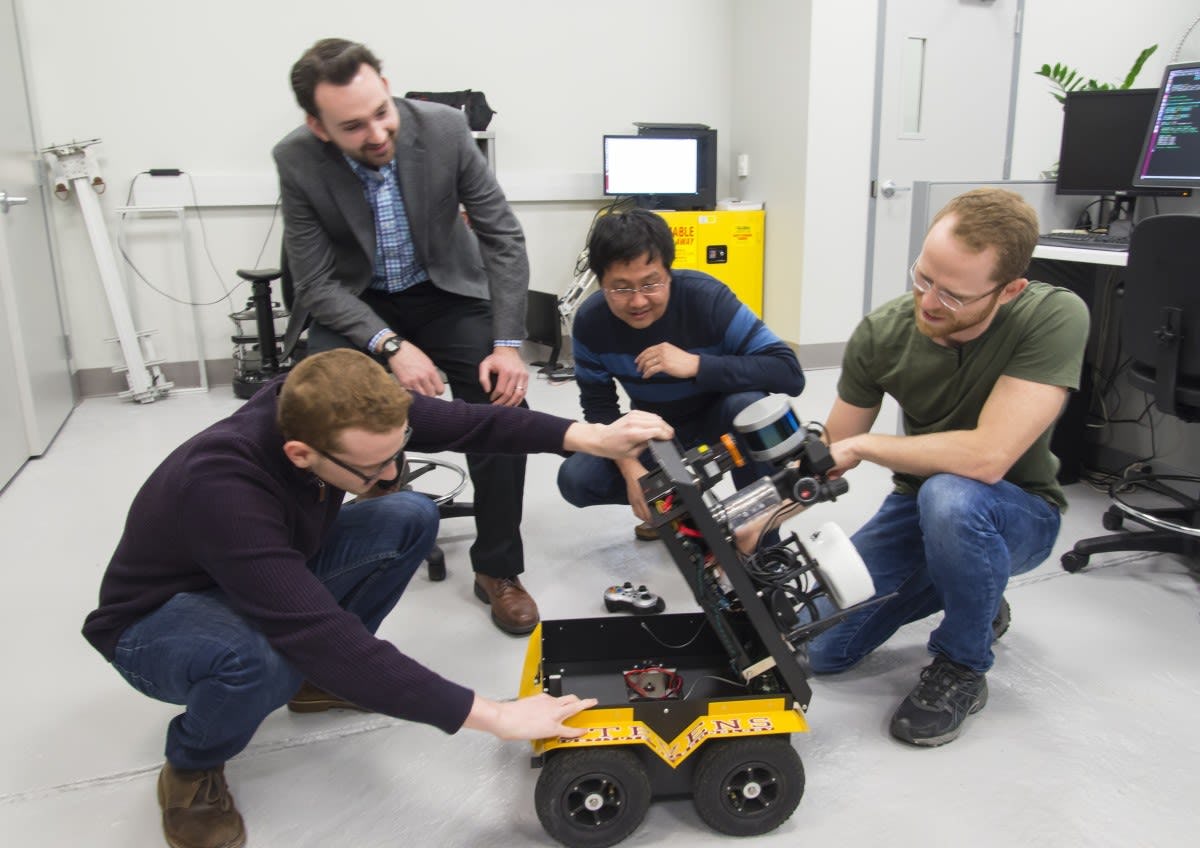

The main experimental subject at Stevens is a ground-scooting robot affectionately dubbed "The Jackal" — a craft not nearly as scary as it sounds. Basically a briefcase-sized beach buggy souped up with WiFi, transmitters, lasers and motion sensors, the Jackal uses 3D volumetric scanning LIDAR technology. By pointing a pair of lasers as it moves and continually receiving, calculating and storing the resulting data, it can make sophisticated maps of ground terrain.
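As a rough illustration of how laser range readings become a map, the Python sketch below turns one scan into grid cells marked free or occupied. It is a simplified 2D stand-in for the 3D volumetric scanning described above, not the Jackal's actual software. The function name `scan_to_grid`, the pose and reading formats, and the 0.5-meter cell size are assumptions chosen for the example.

```python
import math


def scan_to_grid(pose, readings, cell_size=0.5):
    """Mark grid cells as 'free' or 'occupied' from one laser scan.

    pose:     (x, y, heading) of the robot, in meters and radians.
    readings: list of (bearing, range) pairs, bearing relative to heading.
    Returns a dict mapping (col, row) cell indices to 'free' or 'occupied'.
    """
    x0, y0, heading = pose
    grid = {}

    def cell_at(distance, angle):
        # Convert a point along the beam into integer cell indices.
        x = x0 + distance * math.cos(angle)
        y = y0 + distance * math.sin(angle)
        return (math.floor(x / cell_size), math.floor(y / cell_size))

    for bearing, rng in readings:
        angle = heading + bearing
        step = cell_size / 2  # sample the beam twice per cell width
        for i in range(int(rng / step)):
            # The beam passed through these cells, so they are free,
            # unless an earlier beam already found an obstacle there.
            grid.setdefault(cell_at(i * step, angle), "free")
        # The beam stopped here, so something occupies this cell.
        grid[cell_at(rng, angle)] = "occupied"
    return grid


# Hypothetical scan: one beam straight ahead that hits something 2 m away.
cells = scan_to_grid(pose=(0.25, 0.25, 0.0), readings=[(0.0, 2.0)])
print(sorted(cells.items()))
```

Cells no beam ever crosses simply never appear in the dictionary, which is one way to picture the unobserved gaps that a mapping algorithm must later reason about.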

Englot's team works to make those maps ever more accurate.

"Even our best current occupancy maps are very 'patchy' and have lots of holes," admits Englot. "Our hope is that with Kevin's algorithms, we can reason how to fill the holes, then produce occupancy maps that are more predictive in nature while still remaining accurate. This would allow robots to reason about their environments, even with imperfect data."

And in the deep blue, things get very imperfect quickly. Murky water, noise and other factors all can negatively affect the data collected. Yet that hasn't stopped the team from successfully testing their mapping tools on a bright yellow VideoRay remotely operated underwater craft in the historic Davidson Laboratory tow tank right on the Stevens campus — as well as in the Hudson River and in a Long Island marine-academy testing facility.

"The philosophy of our lab is literally throwing our robots into the deep end to deal with all the noise, the uncertainty and the disturbances that can possibly influence them and get our algorithms to work in those conditions," says Englot.

"Even if it's a long process, we think there's value to this. Some roboticists disagree and work in a swimming pool, with clean data. But we put the craft into the harshest environment and try to get it to work there."

During one test, the robot made four sweeps on a nautilus-like trail beneath a murky Hudson River pier. The resulting 3D map of the pier's pilings, collected over about two minutes, was surprisingly accurate.

"The raw product ends up being really enhanced when you apply our methods," notes Englot.

These Stevens algorithms are already attracting interest from fellow researchers, but it's not yet time for a commercial application. For now, basic research on the mapping problem — as well as related areas such as planning and learning — will continue.

"Robots are not yet ready to fly into the interiors of ships or piers and look around without supervision," he points out. "We don't have the situational awareness to enable them to do that safely yet.

"But this lab is trying to build the tools that will give robots that awareness soon, and I believe we are making excellent progress."