Award-winning Stevens innovation is like a 'Fitbit for nutrition' that calculates what you're eating.

Twenty-nine million Americans suffer from diabetes. People with diabetes sometimes experience sudden drops or spikes in their blood sugar levels, which can be dangerous. One of the biggest difficulties in predicting those spikes or drops lies in meal tracking—accurately remembering exactly what we eat and drink.

Stevens Institute of Technology computer science professor Samantha Kleinberg and her team are trying to fix that by devising a way to monitor meals automatically using off-the-shelf wearable devices.

Step 1: Can We Accurately Track Meals?

“It’s annoying and difficult for people to monitor themselves,” Kleinberg says. “We do a bad job of estimating our food and drink intake.”

Between apps, smartwatches and fitness trackers, people are collecting more data than ever about their own health. The next step for the technology is helping the people who use those devices find patterns in all that data and turn them into actionable insights.

For people with diabetes, the key to improving their health is a holistic overview of that data, paired with automated, continuous feedback. Kleinberg approaches the problem by storing, retrieving and analyzing all the data collected by those apps, smartwatches and fitness trackers.

There are currently several ways to track meals. Paper or smartphone-app food logs are the most common, but they have shortcomings. For starters, they’re time-consuming to use. Second, they’re typically used only in short-term studies, which makes it difficult to predict long-term patterns. “That’s a limit of the tests, not the method,” Kleinberg points out. “If the patient sticks with it, or the study has additional funding, you could use it for longer.” Third, apps often match images of meals against a predefined database. Those database entries seldom include diet-specific details, such as whether something was cooked in butter, that a person might need to track but will often forget.

None of those methods are easy or accurate enough for real-world use by people with diabetes.

Kleinberg’s solution is a step in the right direction: audio and motion sensors embedded in off-the-shelf wearables, such as smartwatches, working together to capture holistic data.

Kleinberg and her students used earbuds, a smartwatch on each wrist and Google Glass headsets to record almost 72 hours’ worth of chewing and eating sounds and motions. That’s more than 17,000 chews from normal, everyday meals.

Those devices worked well together. The earbud had two microphones, allowing the team to remove non-chewing noises. Smartwatches on each participant’s wrist tracked lifting and cutting motions. The Google Glass captured head motion.
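The article does not say how the two earbud microphones were used to strip out non-chewing noise. One plausible approach is a simple energy-ratio gate. It assumes one microphone faces into the ear canal and one faces outward, and that chewing, which travels through the jaw, should be much louder on the inner microphone than ambient sound is. The sketch below is a hypothetical illustration of that idea, not the team’s method. The function names, frame length and threshold are all assumptions.

```python
import math
from typing import List, Sequence


def frame_rms(samples: Sequence[float], frame_len: int) -> List[float]:
    """Root-mean-square energy of consecutive, non-overlapping frames.

    A trailing partial frame is dropped.
    """
    energies = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        energies.append(math.sqrt(sum(x * x for x in frame) / frame_len))
    return energies


def chewing_frames(inner: Sequence[float], outer: Sequence[float],
                   frame_len: int = 4, ratio_threshold: float = 2.0) -> List[int]:
    """Indices of frames where the (assumed) in-ear mic dominates the outer mic.

    Hypothetical rule: chewing is bone-conducted, so it should be much louder
    on the inner mic, while ambient noise reaches both mics at similar levels.
    """
    eps = 1e-9  # avoids division by zero on silent frames
    inner_e = frame_rms(inner, frame_len)
    outer_e = frame_rms(outer, frame_len)
    return [i for i, (a, b) in enumerate(zip(inner_e, outer_e))
            if a / (b + eps) >= ratio_threshold]


# Toy signals: frame 0 is silence, frame 1 is chewing (loud in-ear),
# frame 2 is ambient noise (louder on the outward-facing mic).
inner = [0.0] * 4 + [0.8, -0.8, 0.8, -0.8] + [0.1, -0.1, 0.1, -0.1]
outer = [0.0] * 4 + [0.1, -0.1, 0.1, -0.1] + [0.5, -0.5, 0.5, -0.5]
print(chewing_frames(inner, outer))  # → [1]
```

A real system would work on short overlapping windows of recorded audio and would likely learn the decision rule rather than hard-code a ratio. The gate only illustrates why a second microphone helps separate chewing from background sound.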

“When possible, we used consumer devices to determine what performance can be achieved in real-world settings,” Kleinberg and her student researchers explained in a 2016 paper for the Pervasive Health conference. She elaborated to Stevens: “We wanted to know what you could do with off-the-shelf smartwatches, something someone might already have.”

The next step? Using that setup to automatically determine exactly what people ate.

Step 2: Can We Tell What You’re Eating?

“Determining when an individual is eating can be useful for tracking behavior and identifying patterns, but to create nutrition logs automatically or provide real-time feedback to people with chronic disease, we need to identify both what they are consuming and in what quantity.”

Those are the opening words of Kleinberg’s follow-up study, an award-winning UbiComp paper written with student researchers led by undergraduate Mark Mirtchouk and Christopher Merck. As they put it later in the paper, “we need to know not only that someone is eating, but what they are eating and how much they consume to develop truly automated dietary monitoring.”

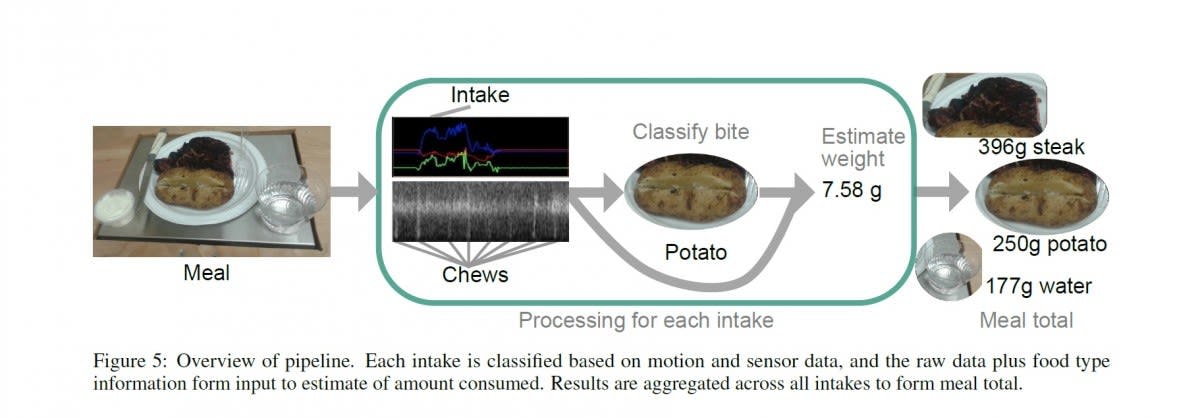

Here’s how they measured all of that:

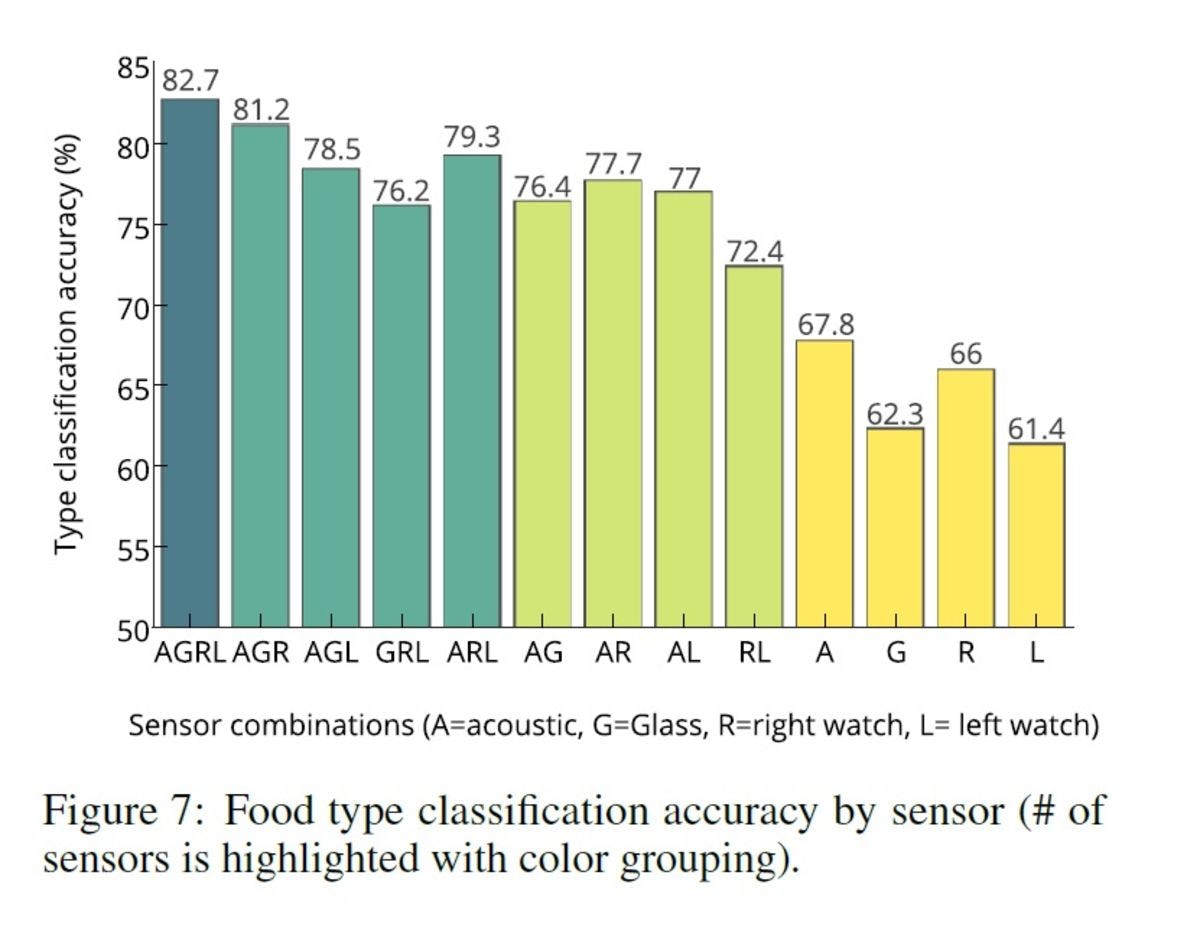

Using that setup, they were able to accurately identify both food type and amount. Combined, the audio and motion sensors identified the type of food consumed in each bite with 83 percent accuracy; audio on its own achieved only 68 percent. That is higher than the accuracy reported by other studies, which used fewer food types. The UbiComp paper discusses one in particular, noting that it “achieved an accuracy of 80 percent using an audio sensor.”

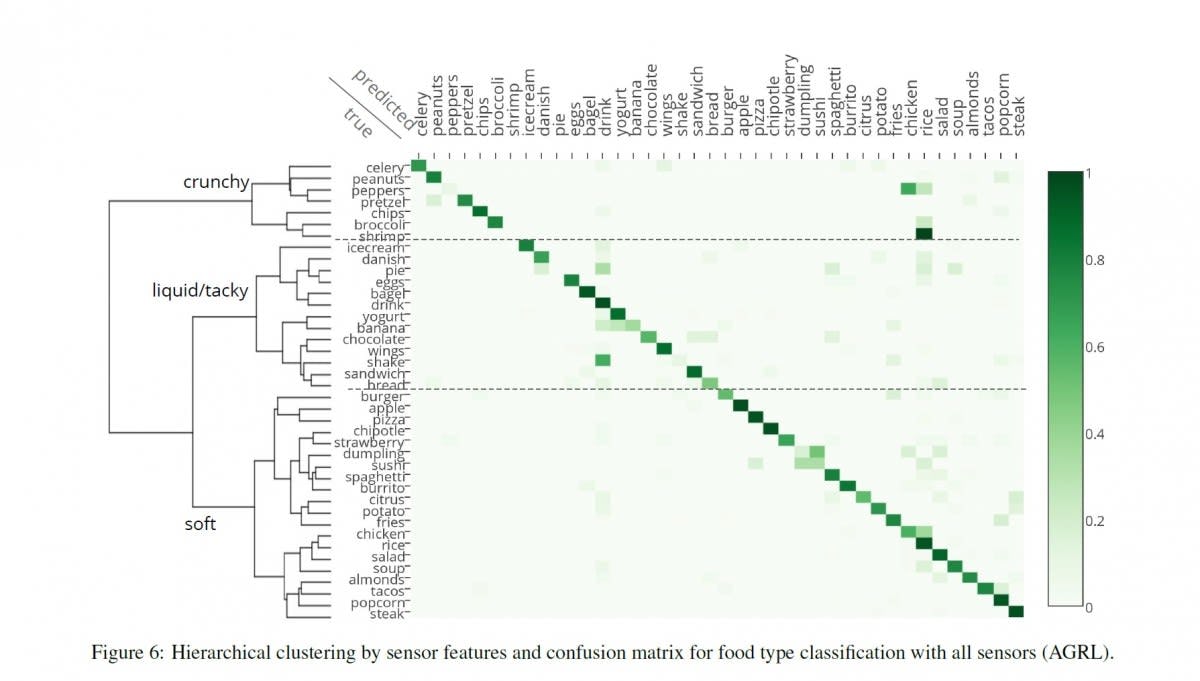

Kleinberg and her students suggest their setup performed better because neither motion nor audio data is comprehensive on its own. “Celery and chicken wings may sound alike if eaten by the same person,” they write, “crunchy foods like pretzels and nuts may have similar noises... motion alone may not be able to tell us if a person is eating yogurt or soup.” Together, audio and motion sensors provide richer, complementary information.
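The article does not describe how the team combined the two sensor streams. As a hypothetical illustration of why complementary sensors help, the sketch below uses a simple late-fusion product rule. It assumes each sensor outputs a probability for every food type, that the sensors are independent given the food, and that every food is equally likely beforehand. It then multiplies the per-food probabilities and renormalizes. The food list and all probability values are invented for the example.

```python
from typing import Dict


def fuse(audio: Dict[str, float], motion: Dict[str, float]) -> Dict[str, float]:
    """Late fusion by the product rule, renormalized to sum to 1.

    Assumes the two sensors are conditionally independent given the food
    type and that all food types share the same prior probability.
    """
    classes = audio.keys() & motion.keys()
    scores = {c: audio[c] * motion[c] for c in classes}
    total = sum(scores.values())
    if total == 0:
        raise ValueError("sensors assign zero probability to every shared class")
    return {c: s / total for c, s in scores.items()}


def best(probs: Dict[str, float]) -> str:
    """Food type with the highest probability."""
    return max(probs, key=probs.get)


# Invented per-bite outputs: audio cannot separate celery from chicken wings,
# but the (hypothetical) motion model favors the hand-held chicken wing.
audio = {"celery": 0.45, "chicken wings": 0.45, "yogurt": 0.05, "soup": 0.05}
motion = {"celery": 0.2, "chicken wings": 0.6, "yogurt": 0.1, "soup": 0.1}

fused = fuse(audio, motion)
print(best(fused))  # → chicken wings
```

Here audio alone leaves celery and chicken wings tied. Motion breaks the tie, and the fused estimate puts about 73 percent of its probability on chicken wings. Feature-level fusion, which trains one classifier on audio and motion features together, is a common alternative.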

Once they knew the food type, the team could also accurately and automatically estimate the quantity of each food in a meal. Their measurement method reduced weight estimation error for traditional food-tracking measurements by more than 85 percent, “reduced from a 127.3 percent baseline error,” they write.

Together, these results put the method on par with human capabilities and make a good start toward the team’s goal of automated food detection.

“Once you know the food type, it’s easier to use combinations of audio and motion feedback to determine the amount of food eaten,” Kleinberg explains. “We were able to estimate what and how much people ate with accuracy on par with humans.”

Step 3: Can This Actually Help People With Diabetes?

Next, Kleinberg and her researchers are building on these results by tracking volunteers’ eating habits outside the lab. Potential future applications of the work could include “providing personalized food suggestions based on dietary goals and prior consumption,” she notes, such as pushing menu suggestions to a user’s smartphone when location data indicates they have entered a restaurant.

“Wouldn’t it be great if you could buy something like a Fitbit for nutrition?” Kleinberg said. “Like a Fitbit, it’s not going to be perfect, but it’s objective and gives you some insight into your behavioral patterns that can let you make more informed decisions.”

Her work is moving us one step closer to making that device a reality.