Stevens Institute of Technology team demonstrates new attack that can guess and learn private information — without even seeing it

Stevens Institute of Technology researchers have created and demonstrated a powerful new artificial intelligence-powered style of attack that can crack into private data and learn it remarkably quickly. It appears, so far, to be immune to normal cyberdefenses.

And, irony of ironies, it works by exploiting and compromising methods only recently developed to protect our privacy.

"A decentralized learning model was proposed to address the issue of a central entity holding all of a private dataset," explains Stevens computer science chair Giuseppe Ateniese, lead investigator in a three-member team that created and tested the new attack. "It was believed data would be safer if no one party held or shared all of it at one time and the learning was done locally, without sharing the data.”

"But we are saying now that this is not safer at all. In fact, it is worse. Your data is more at risk."

By trying to make life easier, devices fall prey

Cyberattacks and breaches are in the news weekly. A recent investigation revealed Android devices track and archive users' locations even if tracking is turned off. Researchers have also demonstrated how a host of everyday devices — from pacemakers and credit-card readers to voting machines and hotel light switches — can be hacked with the right tools in the wrong hands. So can the software that makes your car stop and go. Or your airplane.

Bottom line: it's becoming increasingly difficult to keep data either secure or private.

In response, researchers developed AI methods known as federated, or collaborative, learning. The idea was that users would keep all their own data local and private, training learning models within their own silos and sharing only the resulting model parameters with the group, so that insights could still be mined from the aggregated knowledge.
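As a rough illustration of that idea, here is a minimal sketch of federated averaging on a toy problem. Everything in it is an assumption made for illustration: the function names, the one-feature linear model, the synthetic data and the simple unweighted average are hypothetical and are not taken from the Stevens research or from any production system.

```python
import random

def local_update(weights, data, lr=0.1, epochs=20):
    """Train on one participant's private (x, y) pairs; the data never leaves this function."""
    w, b = weights
    for _ in range(epochs):
        # Gradients of the mean squared error for the model y = w * x + b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / len(data)
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / len(data)
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)

def federated_average(updates):
    """Combine the participants' parameters with a plain, unweighted average."""
    n = len(updates)
    return (sum(u[0] for u in updates) / n, sum(u[1] for u in updates) / n)

def make_private_data(rng, n=20):
    """Hypothetical private silo: noisy samples of y = 3x + 1."""
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return [(x, 3.0 * x + 1.0 + rng.gauss(0.0, 0.05)) for x in xs]

def run_rounds(num_participants=5, rounds=30, seed=0):
    rng = random.Random(seed)
    silos = [make_private_data(rng) for _ in range(num_participants)]
    global_weights = (0.0, 0.0)
    for _ in range(rounds):
        # Each participant starts from the shared model and returns only parameters.
        updates = [local_update(global_weights, data) for data in silos]
        global_weights = federated_average(updates)
    return global_weights

if __name__ == "__main__":
    print(run_rounds())
```

Only the `(w, b)` pairs cross the boundary between participants and the aggregator. Those shared parameters are exactly what the attack described in this article targets.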

"This new learning model, in theory, should be safe," notes Ateniese, who collaborated with Stevens visiting research scholar Briland Hitaj and former faculty member Fernando Perez-Cruz on the research.

But it's not, says the Stevens team.

"If tech companies such as Google or Apple embed federated learning models in their applications, you don’t have a choice," says Hitaj. "It's an essential part of the application."

In fact, products such as the popular Gboard mobile keyboard do contain federated learning models. In Gboard's case, they help intelligently guess typed words or hand drawings made while using the keyboard.

But a decentralized learning model like that one — installed with the good intention of helping optimize a phone's use, interacting with a larger group of users and a central service — is precisely where a hacker can strike, the new research shows.

And that attack can come from any direction.

How to trick a victim's phone

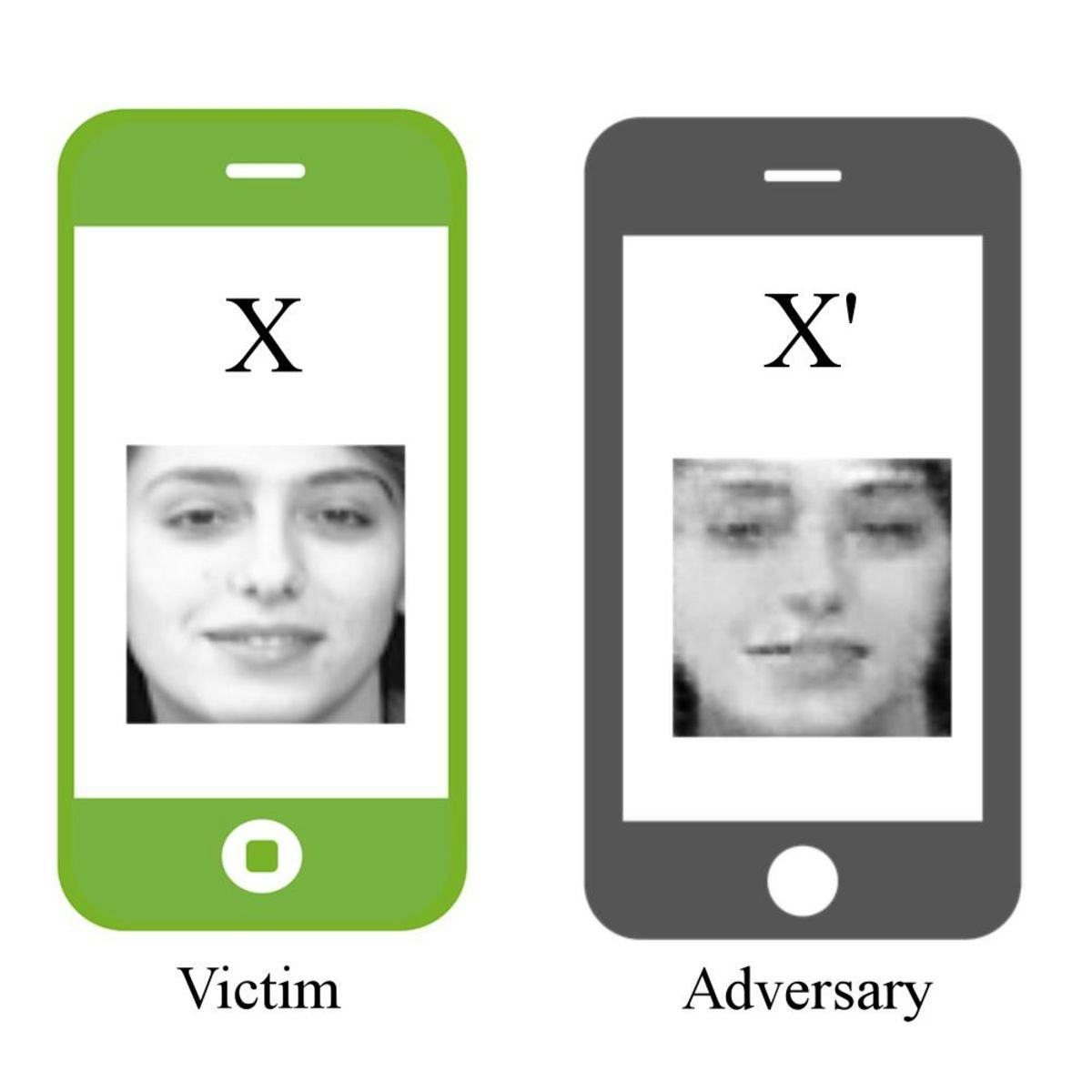

To probe weaknesses in decentralized-learning systems, the Stevens team devised an attack based on generative adversarial networks, or GANs: a deep-learning architecture composed of two neural networks that work in tandem to guess information related to private data.

The GAN method, devised by researchers in 2014 to generate original, life-like photos after studying real images first, turns out to be remarkably powerful. Two competing models toil at lightning speed, each on a specific task: one working feverishly to generate new items, one after another, that look something like an original set of samples while the second just as quickly compares and scores each attempt.
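The two-model tug-of-war can be sketched with a deliberately tiny example. This is a toy under stated assumptions, not the networks used in the research: the "generator" here learns only a single number, `theta`, the center of the values it produces, and the "discriminator" is a one-input logistic scorer. The function name `train_toy_gan` and all settings are hypothetical.

```python
import math
import random

def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def train_toy_gan(real_mean=1.0, steps=2000, batch=64, lr=0.05, seed=0):
    rng = random.Random(seed)
    theta = -2.0     # generator parameter: fake sample = theta + noise
    a, c = 0.0, 0.0  # discriminator parameters: D(x) = sigmoid(a * x + c)
    for _ in range(steps):
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [theta + rng.gauss(0.0, 1.0) for _ in range(batch)]

        # Discriminator step: raise scores on real samples, lower them on fakes.
        grad_a = grad_c = 0.0
        for x in real:
            d = sigmoid(a * x + c)
            grad_a += (1.0 - d) * x
            grad_c += (1.0 - d)
        for x in fake:
            d = sigmoid(a * x + c)
            grad_a -= d * x
            grad_c -= d
        a += lr * grad_a / batch
        c += lr * grad_c / batch

        # Generator step: shift theta so that fresh fakes score higher.
        fake = [theta + rng.gauss(0.0, 1.0) for _ in range(batch)]
        grad_theta = sum((1.0 - sigmoid(a * x + c)) * a for x in fake) / batch
        theta += lr * grad_theta
    return theta

if __name__ == "__main__":
    print(train_toy_gan())
```

The generator never reads the real samples. It improves only through the discriminator's scores, and in this toy setting `theta` should drift from its starting value toward `real_mean`.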

If your goal is to generate, say, fake portraits that are amazingly celebrity-like, it is a fun and effective party trick.

But if you're talking about cracking into private data in a phone, computer or hospital, a GAN's creative power could swiftly become something much more dangerous. That's why, for the first time, Stevens' team turned the GAN's power to an experimentally nefarious end.

Insider access is the key, says Ateniese.

Access, in this case, simply means participation in the federated learning process. Armed with a pass to get behind the velvet rope, any member of the group can then, in theory, do what the Stevens team did: use a GAN to nefariously craft some false model parameters and send them to a target device, closely inspecting responses from that device to the onslaught.

"We noticed that, in this sort of setup, we could actually influence the learning process of participants in such a way that they would release more information than needed," explains Hitaj.

How? As it runs, the learning model in the device obtains updated model parameters from other participants in the decentralized learning process. If those parameters are malicious (for instance, crafted with the help of a GAN), they disrupt the learning process of the remaining honest participants, and the model can be tricked into releasing more parameter updates than are needed. Those parameters carry characteristics of the underlying data: general features of the shape of a single face in an individual picture on a phone, for instance.

Vague details about the shape of a face sound harmless. Except that, through a hail of repeated rebroadcasts and corrections of its guesses, those tiny details quickly add up, allowing a rogue GAN to get very close to guessing what that photo really looks like — even though it never touched the phone or viewed any of its pictures.
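Why would shared parameters say anything about private data at all? The sketch below is not the Stevens team's GAN attack. It is a much simpler, well-known illustration, under the assumption of a single-layer linear model trained on one sample, in which the shared update reveals the private input exactly. The function names and the four "pixel" values are hypothetical.

```python
def single_sample_update(weights, bias, x, y):
    """Gradient of 0.5 * (w.x + b - y)^2 for one private sample (x, y)."""
    err = sum(w * xi for w, xi in zip(weights, x)) + bias - y
    grad_w = [err * xi for xi in x]  # proportional to the private input
    grad_b = err
    return grad_w, grad_b

def reconstruct_input(grad_w, grad_b):
    """Recover the input from the shared gradient alone: x = grad_w / grad_b."""
    if grad_b == 0:
        raise ValueError("zero error: this update carries no information about x")
    return [g / grad_b for g in grad_w]

# Hypothetical private data: four pixel intensities that never leave the device.
private_pixels = [0.2, 0.9, 0.4, 0.7]
grad_w, grad_b = single_sample_update([0.0] * 4, 0.0, private_pixels, y=1.0)

# An observer who sees only the update can rebuild the pixels.
recovered = reconstruct_input(grad_w, grad_b)
```

Real models are deeper and updates are computed over many samples, so the leak is blurrier than in this toy case. The article's point is that a GAN can sharpen that blur through repeated rounds.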

"Even scrambling or obfuscating the parameters of the model on the device is not enough to fend off this type of attack," Ateniese warns. "After designing and testing it, we basically concluded that the decentralized approach to learning — which is becoming increasingly popular — is actually fundamentally broken, and does not in fact protect us."

Creating new defenses for a previously unknown attack

Mounting the first-ever GAN-driven security attacks was instructive, but the next step will be even more critical, say the Stevens researchers. That will involve designing and testing robust defenses against the new attack method.

"In tests, our team was unable to devise effective countermeasures to the new attack," concludes Ateniese.

"That will certainly be a future area of inquiry of ours here at Stevens. No problem is unsolvable, but it will definitely take further research efforts to achieve this."