How Stevens Researchers Are Creating Better Maps for Robots, Self-Driving Cars

Working with MIT and other collaborators, a student-faculty team develops algorithms and A.I. to help unmanned ground robots and autonomous vehicles learn to navigate better and faster

Autonomous vehicles learn their surroundings on the fly in order to keep occupants traveling safely. Military drones need to create maps of unknown territories and do reconnaissance as quickly and efficiently as possible. Unmanned robots must move through unfamiliar places, learning as they go, in order to perform inspections or other operations without becoming lost, disabled or drained of power.

But making maps of new places, in real time, isn't as simple as it might seem — even with powerful computers onboard.

Now a pair of Stevens Institute of Technology research projects, headed by a Ph.D. student and an award-winning faculty member, aim to help vehicles and systems learn to map new landscapes, streets and other spaces faster and more efficiently.

"This is exciting," says doctoral candidate Tixiao Shan, who is developing the research with Stevens mechanical engineering professor Brendan Englot. "We began with the problem of motion planning, but in the process of getting there developed these new and improved methods of mapping."

LeGO-LOAM: leaner, more accurate mapping

Working with a compact, tough-tired rolling robot dubbed "the Jackal," Shan and Englot have delved into two novel approaches to building better maps.

The first involves using (and improving) a common laser-based scanning technology known as LIDAR. Roving robots sporting LIDAR can't always stay connected to GPS as they catalogue features and geography — particularly when navigating indoors or in remote areas, where physical features such as mountains may block direct access to satellites in the sky.

"The technology works in those environments, but it's not ideal," notes Shan.

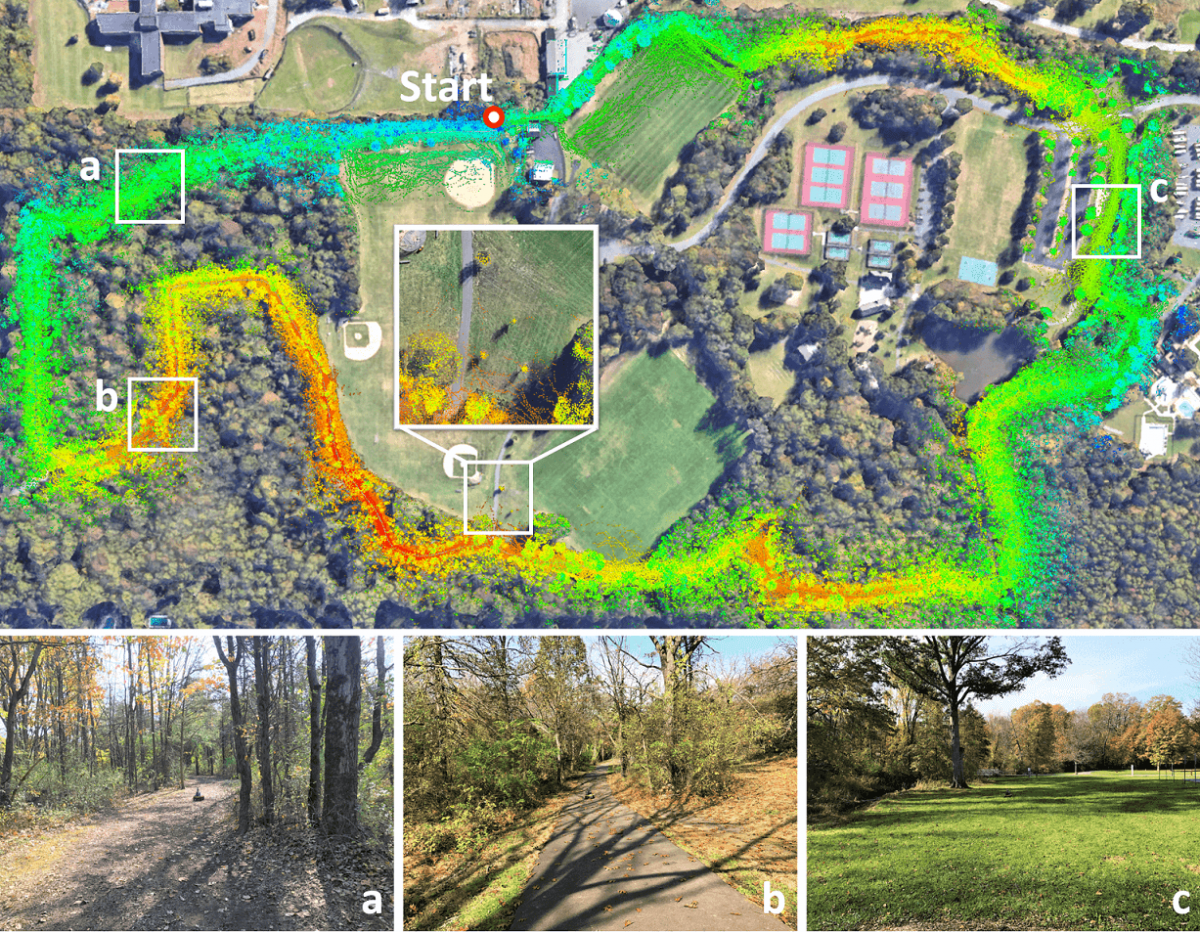

To cope with the challenge, the duo developed a system known as LeGO-LOAM (lightweight, ground-optimized LIDAR odometry and mapping) consisting of an unmanned ground vehicle with a small but sophisticated scanner mounted onboard.

The "lightweight" in the name actually refers not to hardware, but to algorithms.

"Existing mapping methods and algorithms use a tremendous amount of computational resources," explains Shan. "What we have done here is, first, tailored the mathematical assumptions and calculations to the problem of ground mapping. That makes a big difference when you factor in the ground plane. Second, we've developed a much more 'minimalistic' algorithm that focuses only on the most useful information, the most useful data points."

"Together, this makes computation far more efficient — while also actually yielding better maps."
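To make the ground-plane idea concrete, here is a minimal Python sketch of one common way to separate ground points: walk up one vertical column of a LIDAR scan and mark neighbouring points as ground when the line between them is nearly horizontal. The function name, the column layout, and the 10-degree threshold are illustrative assumptions, not code taken from LeGO-LOAM.

```python
import math

def label_ground(column, max_slope_deg=10.0):
    """Flag ground points in one vertical column of a LIDAR scan.

    `column` is a list of (x, y, z) points ordered from the lowest laser
    ring upward (an assumed layout). Two vertically adjacent points count
    as ground when the segment joining them is close to horizontal.
    """
    is_ground = [False] * len(column)
    for i in range(len(column) - 1):
        x0, y0, z0 = column[i]
        x1, y1, z1 = column[i + 1]
        horizontal = math.hypot(x1 - x0, y1 - y0)
        vertical = abs(z1 - z0)
        # inclination of the segment between the two points, in degrees
        slope = math.degrees(math.atan2(vertical, horizontal))
        if slope <= max_slope_deg:
            is_ground[i] = is_ground[i + 1] = True
    return is_ground
```

Setting ground points aside early lets later steps treat them with ground-specific assumptions instead of processing every point the same way.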

Because the LIDAR delivers more data than the rolling robot can process, the leaner Stevens algorithms distill the incoming data down to its most useful features, which are then matched across consecutive scans to track the robot's motion very accurately.
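Feature selection of this kind is often based on a local smoothness score: a point whose range differs sharply from its neighbours along the same laser ring looks like an edge, while a point in a flat stretch looks like part of a plane. The Python sketch below computes such a score and keeps a few of each kind. The window size, threshold, and feature counts are hypothetical parameters, and the code is a simplified stand-in rather than the Stevens implementation.

```python
def smoothness(ranges, half_window=5):
    """Curvature-like score for each point along one LIDAR ring.

    Points too close to either end of the ring get None (no full window).
    """
    n = len(ranges)
    scores = [None] * n
    for i in range(half_window, n - half_window):
        neighbours = ranges[i - half_window:i] + ranges[i + 1:i + 1 + half_window]
        diff = sum(r - ranges[i] for r in neighbours)
        # normalise by window size and by the point's own range
        scores[i] = abs(diff) / (len(neighbours) * ranges[i])
    return scores

def pick_features(ranges, half_window=5, edge_thresh=0.1, n_edge=2, n_planar=4):
    """Return (edge_indices, planar_indices): sharpest and smoothest points."""
    scores = smoothness(ranges, half_window)
    valid = sorted((s, i) for i, s in enumerate(scores) if s is not None)
    planar = [i for s, i in valid[:n_planar] if s < edge_thresh]
    edges = [i for s, i in reversed(valid) if s > edge_thresh][:n_edge]
    return edges, planar
```

Keeping only a handful of edge and planar points per ring is what makes the later matching step cheap.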

In side-by-side tests against LOAM, a state-of-the-art method that uses the same scan-matching technique to localize LIDAR-equipped vehicles, the Stevens-developed LeGO-LOAM proved more accurate. The system can map several miles of terrain with only very slight errors (less than one meter in either direction), and it does so without any access to GPS or other conventional mapping tools or data.
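Scan matching turns matched features into a motion estimate. As a simplified, hypothetical stand-in (LeGO-LOAM itself works in 3D and solves an iterative optimization), the Python sketch below recovers a 2D rotation and translation in closed form from point pairs that are assumed to be already matched between two consecutive scans.

```python
import math

def estimate_motion_2d(prev_pts, curr_pts):
    """Least-squares (theta, tx, ty) such that R(theta) @ curr + t ~ prev.

    prev_pts[k] and curr_pts[k] are assumed to be the same feature seen in
    the previous and current scan; the result is the robot's motion.
    """
    n = len(prev_pts)
    pcx = sum(p[0] for p in prev_pts) / n
    pcy = sum(p[1] for p in prev_pts) / n
    ccx = sum(c[0] for c in curr_pts) / n
    ccy = sum(c[1] for c in curr_pts) / n
    s_cos = s_sin = 0.0
    for (px, py), (cx, cy) in zip(prev_pts, curr_pts):
        ax, ay = cx - ccx, cy - ccy  # centred current point
        bx, by = px - pcx, py - pcy  # centred previous point
        s_cos += ax * bx + ay * by
        s_sin += ax * by - ay * bx
    theta = math.atan2(s_sin, s_cos)  # rotation that best aligns the pairs
    c, s = math.cos(theta), math.sin(theta)
    tx = pcx - (c * ccx - s * ccy)
    ty = pcy - (s * ccx + c * ccy)
    return theta, tx, ty
```

Chaining these frame-to-frame estimates yields the robot's trajectory, and any small per-step error accumulates as drift over long distances.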

"Once you incorporate GPS, ground-map data, roadway data and other information, this could become very powerful indeed as a mapping and navigation system," points out Englot. "That would be the logical next step."

MIT collaboration uses A.I. to improve maps even further

A mapping or navigation system is only as good as the data it collects and deploys, and sometimes weather, terrain or other actors make it difficult to acquire high-quality information. That's especially true when navigating uneven ground or making indoor-outdoor transitions, such as driving into or out of garages, over bridges and through tunnels.

"In these situations, good existing maps of all the spaces you want to navigate are often unavailable," notes Englot.

That's why, more recently, Shan and Englot have added artificial intelligence to the mix, enabling even higher-resolution, more powerful, more accurate maps to be drawn by vehicles as they move — again, even when there's little to no preexisting map data to draw on.

In collaboration with MIT researcher and Stevens alumnus Kevin Doherty '17 — previously an undergraduate researcher in Englot’s lab — and supported by the National Science Foundation, the team is exploring better methods of mapping and rendering walls, barriers, roadside curbs and other hard boundaries.

"Those boundaries and barriers turn out to be a crucial component of the A.I. software built into self-driving vehicles," explains Englot. "Even when the scanner can't see everything, we have found a way to infer elevation and traversability at a high resolution by applying learning algorithms to the problem."

The heart of the new algorithm is a statistical technique known as the Bayesian generalized kernel (BGK) method, which makes inferences about data and guesses at missing information.

While BGK has been around for about a decade, Shan and Englot are the first known researchers to apply it to ground-mapping applications. Their specialized algorithms begin by building a point cloud from incoming scan data, organizing it into grid cells that contain height data, and calculating inferred elevations to produce a rudimentary terrain map of the entire area: a first-draft map, if you will.
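The gridding step can be sketched in a few lines of Python: bin each (x, y, z) point by its horizontal position and keep a height summary per cell. Using the mean height and a 0.5 m cell size are assumptions for illustration. Cells the scanner never hit simply have no entry, which is the kind of gap the inference step is meant to fill.

```python
from collections import defaultdict

def elevation_grid(points, cell_size=0.5):
    """Return {(i, j): mean height} for every grid cell that received points."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for x, y, z in points:
        # floor division keeps negative coordinates in the correct cell
        key = (int(x // cell_size), int(y // cell_size))
        sums[key] += z
        counts[key] += 1
    return {key: sums[key] / counts[key] for key in sums}
```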

Next, the algorithms return to the map for a second pass, using the inferred elevations to accurately estimate terrain traversability at selected points on the map. Why only at certain points? To save time and computational resources, a common A.I. shortcut.
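A simple, hypothetical traversability test compares each cell's height with its neighbours and rejects cells where the local slope is too steep. To mirror the idea of evaluating only certain points, this Python sketch checks only cells on a coarser lattice (every `stride`-th cell). The slope limit, cell size, and stride are assumed values, not figures from the Stevens work.

```python
def traversable_cells(heights, cell_size=0.5, max_slope=0.3, stride=2):
    """Label sampled cells traversable when no 4-neighbour is too steep.

    heights: dict mapping (i, j) grid indices to a cell height in meters.
    Only cells whose indices are both multiples of `stride` are evaluated.
    """
    labels = {}
    for (i, j), h in heights.items():
        if i % stride or j % stride:
            continue  # skip cells off the sampling lattice to save work
        steep = False
        for di, dj in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            neighbour = heights.get((i + di, j + dj))
            # rise over run between adjacent cell centres
            if neighbour is not None and abs(neighbour - h) / cell_size > max_slope:
                steep = True
                break
        labels[(i, j)] = not steep
    return labels
```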

Finally, the gaps between those points are intelligently estimated and filled in using the BGK method, which draws on probabilities learned from the entire history of scans.
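The core BGK idea can be sketched as kernel-weighted averaging with a prior: each observation near a query location contributes to the estimate in proportion to a kernel weight that shrinks with distance. The Python sketch below uses the sparse kernel of Melkumyan and Ramos, which is exactly zero beyond a chosen length scale and is a common choice in BGK mapping work. The length scale, prior mean, and prior weight are assumptions for illustration, not the team's settings.

```python
import math

def sparse_kernel(d, length_scale=1.0, sigma0=1.0):
    """Sparse kernel: smooth, equal to sigma0 at d = 0, zero for d >= length_scale."""
    if d >= length_scale:
        return 0.0
    r = d / length_scale
    return sigma0 * ((2.0 + math.cos(2.0 * math.pi * r)) / 3.0 * (1.0 - r)
                     + math.sin(2.0 * math.pi * r) / (2.0 * math.pi))

def bgk_estimate(query, observations, length_scale=1.0,
                 prior_mean=0.0, prior_weight=1e-3):
    """Kernel-weighted Bayesian estimate of a value (e.g. elevation) at `query`.

    observations: list of ((x, y), value) pairs.
    Returns (mean, total_weight); with no nearby evidence the mean falls
    back to prior_mean and total_weight equals prior_weight.
    """
    weighted_sum = prior_weight * prior_mean
    total = prior_weight
    for (x, y), value in observations:
        k = sparse_kernel(math.hypot(query[0] - x, query[1] - y), length_scale)
        weighted_sum += k * value
        total += k
    return weighted_sum / total, total
```

Because the kernel vanishes beyond its length scale, each query needs only nearby observations, and the returned total weight doubles as a rough confidence measure: a value close to the prior weight means there was little nearby evidence.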

"By feeding quantities of existing map data into a machine learning model, we have built an algorithm that makes very good assumptions about what isn't seen, based on what the robot or vehicle does see," summarizes Shan, who has created an interesting video demonstrating the method's map-drawing prowess.

Self-driving snowplows, neural nets on tap next

What's next? Researchers at Carnegie Mellon University and the University of Minnesota, among others, have already built upon the team's research projects to work toward such applications as self-driving snowplows.

Shan and Englot have also begun building deep learning into their systems, developing complex neural networks that can learn and predict patterns in terrain data even more quickly and accurately.

"We're already making some progress there," concludes Shan, who plans to pursue postdoctoral research in academia once he completes his Stevens Ph.D.

"And we are confident this trio of technologies will become quite useful for ground mapping for a wide variety of situations and applications, including for self-driving vehicles."