Driving Change: Supported by NIH & Google, Stevens Uses AI to Improve Mobility, AR

Computer vision expert Philippos Mordohai improves augmented reality, self-driving wheelchairs

The AR (augmented reality) technology that powers interactive games like Pokémon Go requires fast, powerful software that can continuously build complex, changing three-dimensional scenes on the fly, often with only the computing power of a single phone or tablet to draw on.

"That's the problem," explains Stevens computer science professor Philippos Mordohai, an expert in the growing artificial intelligence field known as computer vision. "These things ultimately need to be able to run on a phone, tablet or mobility-assistance device."

To tackle that problem, Mordohai, a faculty member since 2008 who previously worked on 3D reconstruction projects funded by the Defense Advanced Research Projects Agency (DARPA), is now working on several new applications.

Augmenting reality by rendering scenes in motion

Now, supported by a recent unrestricted gift from Google, where he was a visiting research scholar during the spring and summer of 2018, Mordohai is developing algorithms that support and improve AR applications by continuously reconstructing a scene in 3D from multiple images.

Mordohai leverages ARCore, Google's proprietary AR development software, to estimate a user's location and orientation moment-to-moment by sampling images from the camera.

Then, using an algorithmic technique known as SLIC (simple linear iterative clustering), he simplifies complex images and scenes into so-called "superpixels": larger chunks of neighboring, similar pixels that still represent the scene accurately, much as mosaic tiles do.
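The superpixel step can be illustrated with a minimal SLIC sketch in Python with NumPy. It seeds cluster centers on a regular grid with spacing `step`, assigns each pixel to the nearest center within a window of roughly 2S x 2S pixels using a distance that mixes color difference and spatial distance (weighted by `compactness`), and then moves each center to the mean of its pixels. This is a simplified illustration, not Mordohai's code: it skips the gradient-based seed adjustment and the connectivity clean-up of the full algorithm, and the function and parameter names are hypothetical. SLIC normally runs on CIELAB colors; scikit-image's `skimage.segmentation.slic` provides a complete implementation.

```python
import numpy as np

def slic_superpixels(image, step, compactness=10.0, iterations=5):
    """Simplified SLIC: cluster pixels on color plus position.

    image: float array of shape (H, W, C), e.g. CIELAB or RGB values.
    step: approximate superpixel spacing S in pixels.
    Returns an (H, W) int array of superpixel labels.
    """
    h, w, c = image.shape
    # Seed cluster centers on a regular grid with spacing `step`.
    ys, xs = np.mgrid[step // 2:h:step, step // 2:w:step]
    ys, xs = ys.ravel(), xs.ravel()
    # Each center row holds [color channels..., y, x].
    centers = np.hstack([image[ys, xs], np.stack([ys, xs], axis=1)]).astype(float)

    yy, xx = np.mgrid[0:h, 0:w]
    labels = -np.ones((h, w), dtype=int)
    for _ in range(iterations):
        dist = np.full((h, w), np.inf)
        for k, center in enumerate(centers):
            color, cy, cx = center[:c], center[c], center[c + 1]
            # Search only a local window around the center (SLIC's key speedup).
            y0, y1 = max(int(cy) - step, 0), min(int(cy) + step + 1, h)
            x0, x1 = max(int(cx) - step, 0), min(int(cx) + step + 1, w)
            d_color = np.sum((image[y0:y1, x0:x1] - color) ** 2, axis=-1)
            d_space = (yy[y0:y1, x0:x1] - cy) ** 2 + (xx[y0:y1, x0:x1] - cx) ** 2
            # Squared SLIC distance: color term plus spatial term scaled by (m / S)^2.
            d = d_color + d_space * (compactness / step) ** 2
            window = dist[y0:y1, x0:x1]  # a view, so writes update `dist`
            closer = d < window
            window[closer] = d[closer]
            labels[y0:y1, x0:x1][closer] = k
        # Move each center to the mean color and position of its pixels.
        for k in range(len(centers)):
            mask = labels == k
            if mask.any():
                centers[k, :c] = image[mask].mean(axis=0)
                centers[k, c:] = yy[mask].mean(), xx[mask].mean()
    return labels
```

With a small `compactness`, color differences dominate and superpixels follow image edges closely; larger values produce more regular, grid-like tiles.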

Next, he trains the algorithms to identify planes in the surrounding scene and "fit" them to those superpixels. The process begins by sampling horizontal planes in the images being acquired, since objects usually rest on floors, streets and other horizontal support surfaces, and then moves on to vertical, diagonal and other planes.
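One way to picture horizontal-first plane fitting is the sketch below. It assumes each superpixel already has a set of 3D points (for example, from depth estimates) expressed in a gravity-aligned frame whose y-axis points up. It first tests a horizontal plane, fitting only its height, and falls back to an unconstrained least-squares plane (computed with an SVD) when a horizontal plane does not explain the points. The tolerance, angle threshold, axis convention and function names are illustrative assumptions, not details of the Stevens system.

```python
import numpy as np

# Assumption: points are in a gravity-aligned frame whose +y axis points up.
UP = np.array([0.0, 1.0, 0.0])

def fit_plane(points):
    """Least-squares plane through an (N, 3) array of points.

    Returns (normal, d) with unit normal n such that n . p + d = 0 on the plane.
    """
    centroid = points.mean(axis=0)
    # The right singular vector with the smallest singular value is the normal.
    _, _, vt = np.linalg.svd(points - centroid, full_matrices=False)
    normal = vt[-1]
    return normal, -(normal @ centroid)

def classify_plane(normal, max_tilt_deg=10.0):
    """Label a plane by the angle between its normal and the up axis."""
    cos_up = abs(normal @ UP)
    if cos_up >= np.cos(np.radians(max_tilt_deg)):
        return "horizontal"
    if cos_up <= np.sin(np.radians(max_tilt_deg)):
        return "vertical"
    return "diagonal"

def fit_superpixel(points, tol=0.02):
    """Try a horizontal support plane first, then fall back to a general fit."""
    # Horizontal hypothesis: the normal is fixed to UP, so only the height is fit.
    d = -(points @ UP).mean()
    if np.abs(points @ UP + d).mean() <= tol:  # mean point-to-plane distance
        return "horizontal", UP, d
    normal, d = fit_plane(points)
    return classify_plane(normal), normal, d
```

Checking the cheap, constrained horizontal hypothesis first mirrors the reasoning in the text: floors and tabletops are the surfaces virtual objects most often rest on.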

"When you have virtual characters interacting with a scene, they usually act like real people: they step on the ground, go behind things without passing through them, and so forth," Mordohai explains. "So this requires some precision. You don' t want a character appearing to stand a few inches below the floor, for example. You have to get the depth estimates and the relationships in the scene right."

Better images, more efficiency

AR applications are complex. When they run in real time on a phone, computation and energy use can quickly get out of hand.

Reining in that cost is one of the key contributions Mordohai hopes to make: more efficient processes that speed delivery of AR and also trim energy use.

"Even if AR software is powerful enough to run on a phone, there are so many computations involved that you quickly drain the battery," he explains. "Our method means taking up just enough resources to provide an answer, the current best answer or estimate, while still continuing to work on the scene problem in the background."

In the case of a virtual character traveling across a scene, for instance, Mordohai is working to develop algorithms that focus computation and detailed scene-drawing on the parts of a scene where the character is immediately located and the viewer is looking. The rest of the scene can be set aside, very temporarily, in memory.
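Focusing computation can be framed as a scheduling problem. In this hypothetical sketch, each scene region has a position in the image and a compute cost. Regions are ranked by their distance to the nearer of the character and the viewer's gaze point, refined in that order while the frame's budget lasts, and the rest are deferred until a later frame. The `Region` type, its cost units and the distance-based priority are assumptions made for illustration.

```python
from __future__ import annotations

import math
from dataclasses import dataclass

@dataclass
class Region:
    name: str
    center: tuple[float, float]  # position in the image (pixels)
    cost: int                    # hypothetical compute units to refine it

def schedule(regions, character_xy, gaze_xy, budget):
    """Choose which regions to refine this frame, closest to the focus first.

    Returns (refined, deferred) lists of region names; deferred regions keep
    their current reconstruction in memory until a later frame.
    """
    def focus_distance(region):
        return min(math.dist(region.center, character_xy),
                   math.dist(region.center, gaze_xy))

    refined, deferred = [], []
    for region in sorted(regions, key=focus_distance):
        if region.cost <= budget:
            budget -= region.cost
            refined.append(region.name)
        else:
            deferred.append(region.name)
    return refined, deferred
```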

That's a sharp contrast with the way most 3D scene-modeling algorithms currently work: They give a more complete, detailed scene reconstruction, but they also take longer and require more power.

"We want to progressively improve the estimate — the scene being reconstructed and the virtual elements rendered — as you move the phone around," he says. "The goal is to have something that will run on the next generation of phones."

Wheelchairs that better assist drivers

For another project, funded by the National Institutes of Health beginning in 2014, Mordohai's team has been developing algorithms that train powered wheelchairs to navigate and operate more efficiently, even to the point of personalizing the experience for each wheelchair user.

"In particular, we focused on those with some sort of hand disability," explains Mordohai, who collaborated with doctoral candidate Mohammed Kutbi and postdoctoral researcher Yizhe Chang among others on the project. "More than 2 million American adults use wheelchairs, and the conventional joysticks can make driving these chairs very difficult for some users."

Before deploying AI, the team began by addressing the ergonomic challenges of engineering an easier-to-use wheelchair. Various alternative control methods for motorized wheelchairs have been tested, including chin controls and back-of-head switches, but most of those systems still strain the user's neck, muscles, tendons or bones.

"In our cases, we developed a system in which the motions are very small, there are no mechanical devices, and there's no resistance," notes Mordohai.

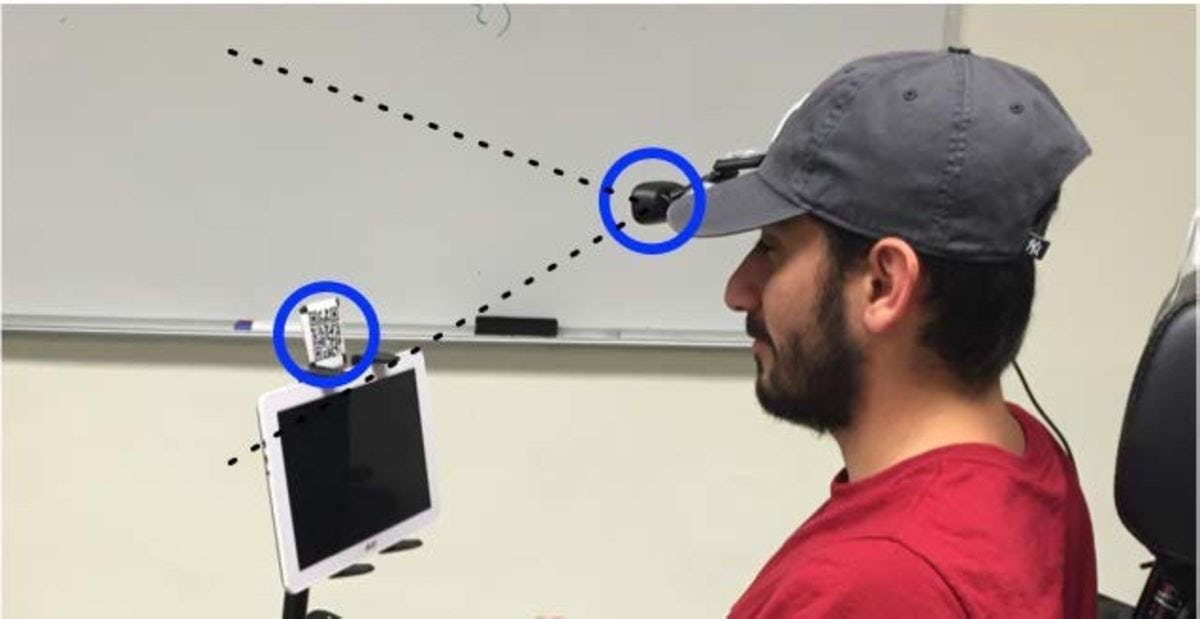

The Stevens system uses a Microsoft Kinect sensor mounted on the wheelchair and a small webcam attached to a hat worn by the user to sense the environment. A tablet mounted on the wheelchair's armrest provides the occupant with a navigational map that updates in real time to assist in wayfinding.

Next, Mordohai's team set to work devising algorithms that could discern and learn individual user patterns and preferences, such as a person's typical separation distance from walls and other people.
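A simple way to learn a preference such as clearance is an exponential moving average of the distances a user keeps while driving. The class below is a hypothetical model, not the team's published algorithm: `alpha` sets how quickly new behavior overrides old habits, and `correction` returns the lateral adjustment that would restore the learned clearance.

```python
class ClearancePreference:
    """Hypothetical per-user model of preferred distance from walls and people."""

    def __init__(self, initial_m: float = 0.5, alpha: float = 0.1):
        self.clearance = {"wall": initial_m, "person": initial_m}
        self.alpha = alpha  # learning rate of the moving average

    def observe(self, kind: str, distance_m: float) -> None:
        """Update the learned clearance from a distance kept during manual driving."""
        old = self.clearance[kind]
        self.clearance[kind] = old + self.alpha * (distance_m - old)

    def correction(self, kind: str, distance_m: float) -> float:
        """Signed lateral nudge in meters; positive moves away from the obstacle."""
        return self.clearance[kind] - distance_m
```

A moving average adapts gradually, which fits the description of preferences that sharpen the longer someone drives the chair.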

As an occupant spent more time driving the chair, the algorithms became better at learning those preferences and using them to subtly anticipate and correct the wheelchair's motion. The team then successfully demonstrated an approach that helps select a user's optimal wheelchair path through an environment, minimizing the number of times the occupant must physically operate the controls.
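Selecting a path that minimizes physical interactions can be posed as a shortest-path problem under an assumed cost model. In the hypothetical sketch below, the environment is a grid of free cells and obstacles (`#`), driving straight costs nothing, and each change of heading costs one control interaction. A 0-1 breadth-first search over (cell, heading) states then finds the route with the fewest interactions. The grid representation and cost model are illustrative assumptions, not the method the team demonstrated.

```python
from collections import deque

DIRS = [(0, 1), (1, 0), (0, -1), (-1, 0)]  # right, down, left, up

def fewest_interactions(grid, start, goal):
    """Minimum number of heading changes needed to drive from start to goal.

    grid: list of strings, '#' marks an obstacle. Assumes (hypothetically)
    that going straight needs no input and every turn costs one interaction.
    Returns None if the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    inf = float("inf")
    best = {}
    dq = deque()
    for d in range(len(DIRS)):  # the initial heading is free to choose
        best[(start, d)] = 0
        dq.append((0, start, d))
    while dq:
        cost, (r, c), d = dq.popleft()
        if cost > best.get(((r, c), d), inf):
            continue  # stale queue entry
        if (r, c) == goal:
            return cost
        for nd, (dr, dc) in enumerate(DIRS):
            nr, nc = r + dr, c + dc
            if not (0 <= nr < rows and 0 <= nc < cols) or grid[nr][nc] == "#":
                continue
            ncost = cost + (nd != d)  # turning adds one interaction
            state = ((nr, nc), nd)
            if ncost < best.get(state, inf):
                best[state] = ncost
                # 0-weight moves go to the front, 1-weight moves to the back.
                if nd == d:
                    dq.appendleft((ncost, (nr, nc), nd))
                else:
                    dq.append((ncost, (nr, nc), nd))
    return None
```

Adding other costs, such as path length or the learned clearance preferences, would turn the 0/1 edge weights into general weights, at which point Dijkstra's algorithm would replace the 0-1 search.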

Although the NIH-supported project has concluded, the work may continue in further iterations.

"It's possible future students will continue to work to refine this technology," Mordohai concludes. "You never know when a new industry partner might surface. In any case, valuable information has been gained about human-robot interactions, and this may be useful for all researchers developing new assistance technologies in the future."