Teaching A.I. to See: How Computer Vision Is Reshaping Medicine, Security, YouTube and the NBA

Newly developed technologies track, locate and analyze everything from stem cells and surveillance footage to LeBron's post-up game

It was inevitable, in a digital era, that AI would eventually come to the NBA.

And the leading-edge technology it uses has a close Stevens connection.

The nuanced stats and video game-style visualizations the league has produced since 2016 are required viewing for NBA coaches. Top-secret mixes of algorithms track, slice, dice and analyze every last move (every pick, roll, pass, shot, fast break, dunk and turnover) in every game, scanning live footage from arena cameras and processing it to help coaches make sense of strengths, weaknesses, tendencies and matchups.

Those tools are largely built on technology Stevens computer science researcher Xinchao Wang originally helped design and prototype.

"Basically, we taught software to follow the trajectories, at every instant, of all the individual players on the court as well as the ball," explains Wang, who performed the work while at the Swiss government-backed institution ETH Zurich. "And that prototype ended up as the basis of the system used in the NBA today."

Wang is one of a cluster of Stevens researchers working to rapidly expand the reach of this fascinating technology, known as "computer vision," which uses AI-driven processing operations and algorithms to recognize visual features such as people, crowds, balls in flight or sudden movements that human observers may have missed due to the limits of our eyesight.

"There's an impressive body of computer vision work already developed here at Stevens," notes Stevens computer science chair Giuseppe Ateniese, who oversees much of the university's research in the white-hot field. "And it's only growing."

Tracking videos by crunching numbers

Boiled down to its essence, computer vision technology harnesses artificial intelligence methods to track and locate objects in space and over time, something people do automatically every moment.

Sound easy? It is and it isn't.

Multiple cameras might first need to be arrayed to capture an event or surveillance scene from varying angles in order to obtain more data. Regardless of how the video footage is captured and collected, however, the resulting frames must each be isolated, converted to data, combined, analyzed again, and output as probabilities.

That’s where machine-learning scientists enter.

"It's basically giving the computer a memory of how an object or agent moves around from instant to instant," says Enrique Dunn, another Stevens researcher in the field.

"I use deep-learning methods to track objects in motion," adds Wang. "They could be anything, but in this case the 'objects' were the ten basketball players and the ball."

For the NBA project, Wang's Swiss team set up mathematical operations that first define the relevant spaces (the basketball court and the air above it) as a series of grids or cells. That's called an occupancy map. He also created processes to describe each individual player as a digital image. Bear in mind that every digital image is, at bottom, nothing more than a bunch of numbers: a complicated, nuanced matrix, yes, but just math nonetheless.

Then Wang devised algorithms that calculated and recalculated, from one moment to the next, the probability that each cell in the grid is either empty or contains something.
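To make the idea of an occupancy map concrete, here is a minimal sketch of one textbook way to keep a running "occupied or empty" probability for every cell: a log-odds update applied once per video frame. It is an illustration only, not the method Wang's team used. The function names, the 50 percent starting belief and the `p_hit` and `p_miss` values are all assumptions made up for the example.

```python
import math

def logit(p):
    """Convert a probability to log-odds."""
    return math.log(p / (1.0 - p))

def sigmoid(x):
    """Convert log-odds back to a probability."""
    return 1.0 / (1.0 + math.exp(-x))

def make_grid(rows, cols, prior=0.5):
    """Return a rows x cols grid of log-odds, every cell starting at the prior."""
    return [[logit(prior)] * cols for _ in range(rows)]

def update_grid(grid, detections, p_hit=0.9, p_miss=0.2, prior=0.5):
    """Fold one frame of detections into the grid, in place.

    detections: set of (row, col) cells where a (hypothetical) detector fired.
    p_hit:  assumed probability a cell is occupied given a detection there.
    p_miss: assumed probability a cell is occupied given no detection there.
    """
    for r, row in enumerate(grid):
        for c in range(len(row)):
            p = p_hit if (r, c) in detections else p_miss
            # Standard log-odds update: add the new evidence, remove the prior.
            row[c] += logit(p) - logit(prior)
    return grid

def probabilities(grid):
    """Return the grid as plain occupancy probabilities."""
    return [[sigmoid(v) for v in row] for row in grid]

# Usage: a tiny 2 x 3 "court" observed over two frames.
grid = make_grid(2, 3)
update_grid(grid, {(0, 1)})   # frame 1: something seen in cell (0, 1)
update_grid(grid, {(0, 1)})   # frame 2: still there
probs = probabilities(grid)
```

After two agreeing frames the belief in cell (0, 1) rises above the single-frame value of 0.9, while the belief in every other cell drops below 0.2. Repeated evidence sharpens the map from one moment to the next.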

By tracking the changes in these complexes of numbers, each representing a characteristic of a frame of live video, across the physical grid and over split seconds of time, Wang's new system quickly learned to figure out who was who, who was moving where and how fast, and who was touching, passing or shooting the ball at what angle and with what force.
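The "who was moving where and how fast" step can be pictured with a deliberately simple sketch: match each known player to the nearest detection in the next frame, then divide the distance moved by the time between frames. This is a greedy nearest-neighbor illustration, not the deep-learning approach Wang describes. The track labels, the `max_jump` limit and the sample coordinates are hypothetical.

```python
import math

def link_frames(prev_positions, detections, max_jump=2.0):
    """Greedily match each existing track to its nearest new detection.

    prev_positions: dict of track_id -> (x, y) court position in meters.
    detections: list of (x, y) positions found in the next frame.
    max_jump: assumed largest plausible move between frames, in meters.
    Returns a dict of track_id -> (x, y) for every track that found a match.
    """
    # Every (distance, track, detection index) candidate, closest first.
    candidates = sorted(
        (math.dist(pos, det), tid, i)
        for tid, pos in prev_positions.items()
        for i, det in enumerate(detections)
    )
    matched, used = {}, set()
    for distance, tid, i in candidates:
        if distance > max_jump:
            break  # all remaining candidates are even farther apart
        if tid in matched or i in used:
            continue  # this track or this detection is already paired
        matched[tid] = detections[i]
        used.add(i)
    return matched

def speeds(prev_positions, new_positions, dt):
    """Speed of each matched track in meters per second over a gap of dt seconds."""
    return {
        tid: math.dist(prev_positions[tid], pos) / dt
        for tid, pos in new_positions.items()
    }

# Usage: two tracked objects, two unlabeled detections one tenth of a second later.
previous = {"A": (0.0, 0.0), "B": (5.0, 5.0)}
current = link_frames(previous, [(5.2, 5.0), (0.3, 0.4)])
print(speeds(previous, current, dt=0.1))
```

Greedy matching is easy to follow but can swap identities when players cross paths, which is one reason production trackers rely on richer models.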

"Previously, this was always done by hand, which is amazing," says Wang. "People actually had to sit there and watch a live game, or go back and watch every action of an entire game on tape, and write down everything that happened, every single movement and pass and shot. That became the data that could be analyzed by coaches. It was a lot of work."

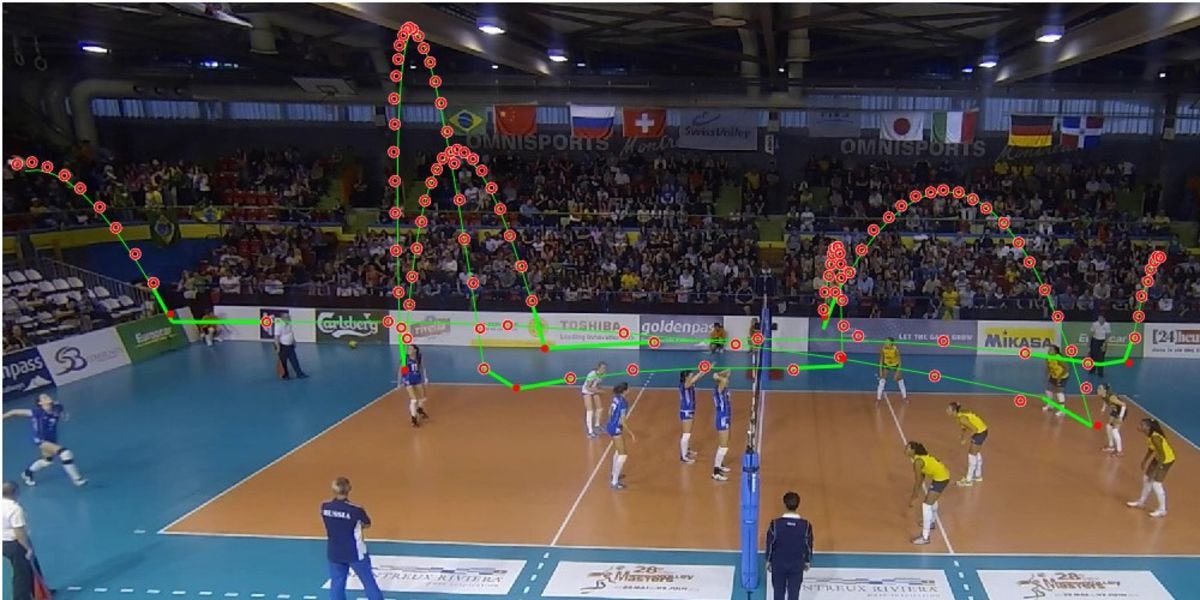

Wang's software instantly streamlined the process and removed human observational bias as a bonus. In further tests processing and analyzing real-time video of volleyball players, he has since developed additional methods that can track and summarize action and game play more accurately and much, much faster.

"There are only a few seconds' delay between live action and a good tracking report and visualization by this system," he says. "That's pretty good."

More efficient hospital staffing, safer public spaces

The usefulness of the new technology doesn’t end at an NBA tipoff, though.

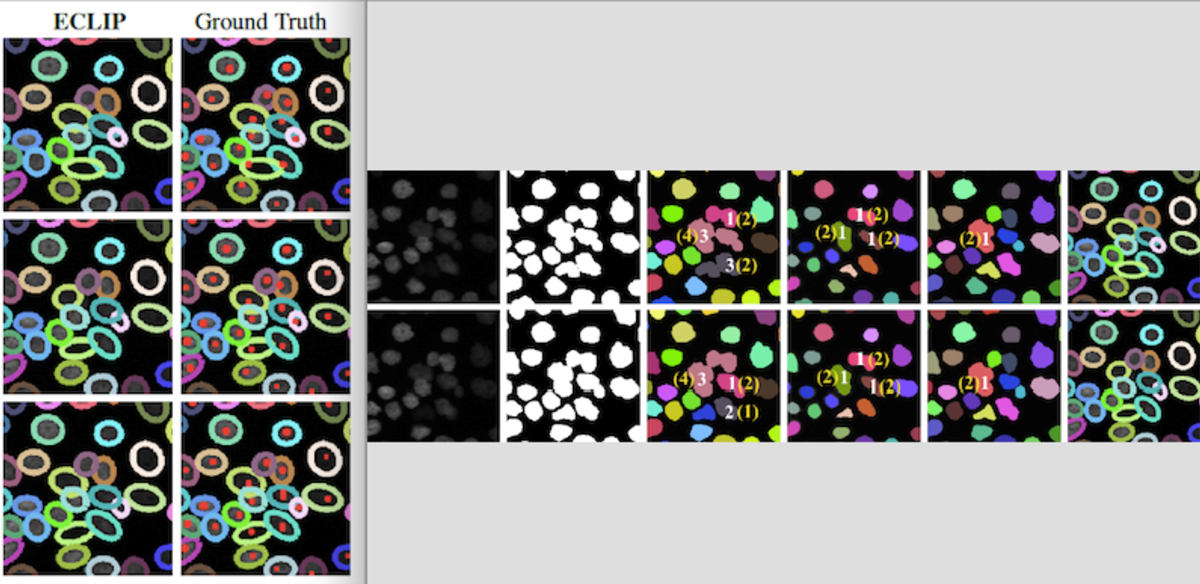

Wang has used similar machine-learning methods to track processes as diverse as the efficiency of operating-room procedures (analyzed from videos made, with permission, in a German medical center); the movement of in vitro human stem cells magnified and photographed using high-powered microscopes; and the motion of people and objects at transit stations and garages, with an eye toward security applications.

"We already see many potential uses for this technology," notes Wang, who has collaborated with experts and universities worldwide. "In the medical case, we would like to understand work flow better, and try to make predictions and optimize operations. For security, once you have accumulated tracking data, we can teach the machine to identify potentially suspicious poses or actions."

The AI, he points out, could be programmed to run and analyze sample videos of known criminal and terrorist acts and threatening situations, as well as footage of harmless crowds and individuals, to learn the mathematical patterns of bad or suspicious acts: a person depositing a backpack-sized object in a station and walking slowly away from it, say, or an individual circling a parked car for a long time.

Then, when those processes spot the same patterns in live video, they could be tuned to flag them automatically, in real time.

"This all can be used to help authorities plan and react more quickly to security threats," says Wang.

In addition to sports, transit and workplace video analysis, Wang is also working on an AI-powered innovation that can sharpen videos, such as those on YouTube or those captured by security cameras, into super-high resolution, and on another that intelligently corrects distortions in images taken by cameras with fisheye-type lenses.

"There's always another area to explore," he says. "There are always new problems and challenges."

Tracking in 3D: the next frontier

What's the next move in the computer vision game? Taking it up a notch to track in true 3D.

Wang recently joined forces with fellow Stevens computer scientist Dunn in a more comprehensive effort to enhance computer vision that can track objects and scenes in greater detail.

Dunn's own expertise lies in image-based 3D modeling, which reconstructs virtual environmental representations from a set of images observing a common scene.

He does it by developing algorithms to pick out and analyze common features in large sets of images, then piecing the scenes together in order to estimate their geometry. In one project, he was able to recreate hundreds of famous world landmarks in 3D — almost down to the inch — without ever seeing them. The system worked, in part, by harvesting and inspecting some 100 million crowd-sourced photos.

"The challenge of efficiently identifying clusters of images, by observing the same scene and relying only on the image content, had not been previously addressed at that scale in the context of image-based modeling," explains Dunn. "The commonality and the redundancy among all those observations is what allows you to infer their geometric relationships. And estimating those geometric relationships then allows you to build fully unsupervised modeling systems."

Now he hopes to combine his expertise with Wang's, creating new methods to better compensate for moving, shaking or low-quality cameras as well as conditions of lesser visibility.

Applications, the duo say, could include virtual and augmented reality, autonomous driving and aerial surveillance, where objects are tracked online in live video feeds captured by moving cameras.

In another fascinating iteration of autonomous/visual navigation, Stevens researcher Philippos Mordohai, who specializes in the resolution of multiple images into cohesive, accurate 3D maps, mounts cameras on a motorized wheelchair to help patients with limited upper-body mobility navigate those wheelchairs more intelligently. The work has been supported by the National Institutes of Health (NIH).

Mordohai also recently received support from Google for another intriguing project: the development of better methods to power augmented reality (AR) systems and games such as Pokémon Go, which need to continuously track real 3D scenes and seamlessly insert moving virtual objects into them.

Making waves at Microsoft, Adobe

Current faculty and students aren't the only ones probing the frontiers and promise of computer vision. Stevens faculty and alumni have also gone on to make a significant impact in the field elsewhere.

Leading computer vision researcher and former faculty member Gang Hua, for instance — who helped develop the wheelchair co-robot research eventually completed by Mordohai — published key research in 2015 while still at Stevens on the use of a type of AI known as a convolutional neural network to detect faces.

Hua later joined Microsoft to refine and apply those techniques further, then moved on to one of China's very largest AI ventures, where he continues to develop cutting-edge computer vision projects today.

"It is a great pleasure to see computer vision grow healthily at Stevens," says Hua now.

Doctoral recipient Haoxiang Li Ph.D. '16 is another alumnus who has made major contributions to face-recognition technology, such as helping to build Adobe Photoshop's proprietary ability to quickly and efficiently identify and organize individual faces in collections of images. Li's name already appears on five U.S. technology patents, with four more pending approval.

As Stevens' computer vision group grows and continues expanding its participation in major conferences and publishing in key journals, department chair Ateniese predicts further applications, enhancements and breakthroughs.

"We have a world-class team in computer vision developing here at the university," he says. "We will continue to attack new problems and create new approaches."