Stevens Institute of Technology researcher and Amazon veteran is teaching machines to make huge quantities of fuzzy data more comprehensible, actionable

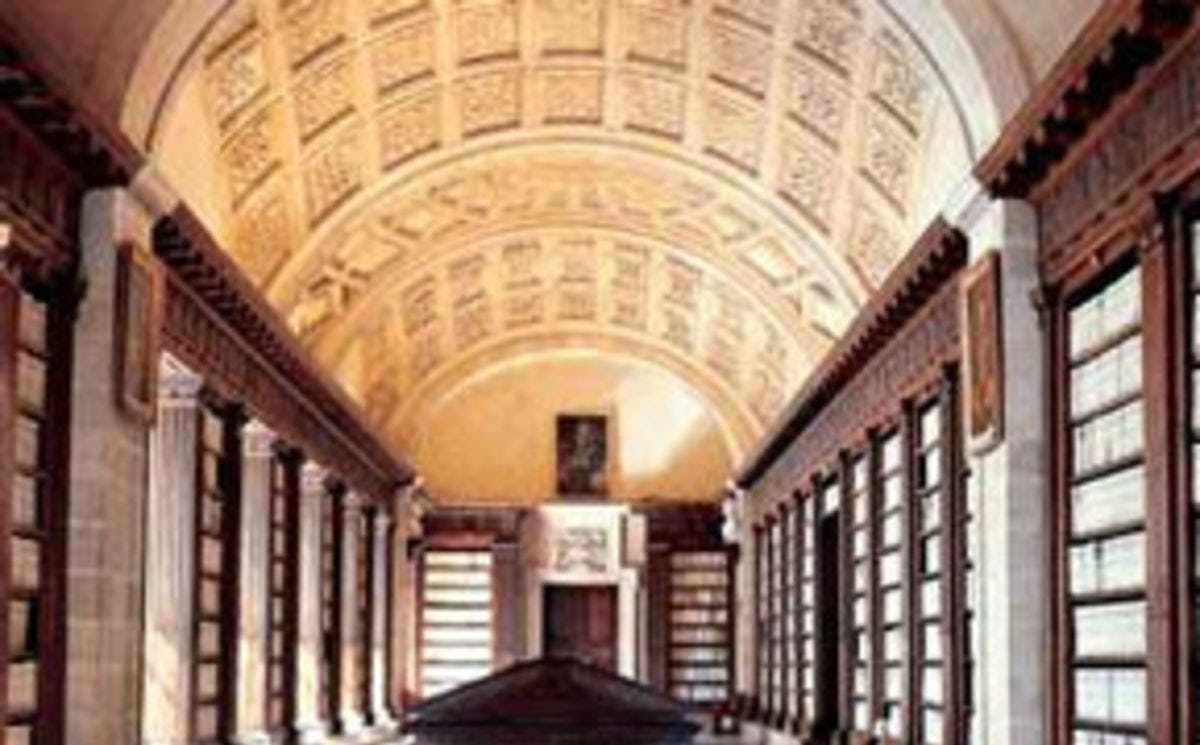

Lining the tiled halls of a modest 16th-century trading house in the center of Seville, Spain, some 88 million pages of original handwritten documents from the past three-and-a-half centuries lie in repository, partially transcribed, some of them nearly illegible. Some were carried back on armadas from the Americas. Some have been scanned and digitized; most have not.

The answers to — and context for — countless questions about European history, the Conquistadors, New World contact and colonialism, law, politics, social mores, economics and ancestry lie waiting within.

Unfortunately, very few of those carefully stored pages have ever been read or interpreted since they were written and carried to Seville centuries ago. Most, sadly, likely never will be.

But now a Stevens Institute of Technology researcher is trying to teach computers to read them, before time runs out, while the documents are still legible.

"What if a machine, a software, could transcribe them all?" asks Stevens computer science professor Fernando Perez-Cruz, an expert in the white-hot research area of machine learning. "And what if we could teach another machine to group those 88 million pages of searchable text into several hundred topics? Then we begin to understand themes in the material.

"We begin to know where to look in this repository of documents to begin to answer our questions."

Indeed, Perez-Cruz is working on both components of this two-pronged approach — an approach that, if he's right, could one day be applied to numerous other next-generation data analysis questions such as autonomous transport and medical data analysis.

Amazon pricing, medical research, text-mining machines

A veteran of Amazon, Princeton University, Bell Labs and University Carlos III of Madrid, Perez-Cruz has enjoyed an especially varied and interesting career tackling scientific challenges.

With Amazon, he worked on understanding profits and costs, developing tools that were later integrated into that company's technology. At Bell Labs, he tackled improved geo-localization of devices using cellular data and devised a machine learning algorithm to handle environmental imperfections. For psychologists, he has also investigated the application of interpretable machine learning models to the understanding of personality disorders using questionnaires.

Perez-Cruz joined Stevens in 2016, crediting the growing strength of the university's computer science department and its new chair, Giuseppe Ateniese, for his decisions.

"Giuseppe has a very strong reputation," notes Perez-Cruz. "He wants to make this a strong research department, one which is attracting more talent and more resources. I like the way Stevens is moving. It is moving in the right direction, and that's what I wanted to see."

Now, at Stevens, he works to develop what he calls 'interpretable machine learning': organized intelligence that humans must still act on.

With regard to the historical document-analysis problem, Perez-Cruz hopes first to develop much better character-recognition engines. By using short extracts of documents written in different styles, which have each previously been transcribed by human experts, he hopes to teach software to recognize both the shapes of characters and frequently-correlated relationships of letters and words to each other, building an increasingly accurate recognition engine over time.

"The question is, how much data, how much transcribed handwriting, is enough to do this well?" he asks. "And we just don't know yet. The work is still embryonic."

While that's a technical challenge, Perez-Cruz believes it may be solvable. He's even more interested in the next step: the organization of massive quantities of known transcribed data into usable topics at a glance.

"With, for example, these three-and-a-half centuries of data then transcribed, what stories can the machine tell you from this data right away?" he asks. "What information is contained there? What can the machine teach itself from the locations of the words and sentences? This is known as topic modeling."

Organizing big data into manageable topics: a key bridge

Once enough data is fed into the algorithm, it begins to detect the most significant identifying and organizing features and patterns in the data — very often different cues from the the cues human researchers guess are significant and search for.

"In the end, we might find that there are for example a few hundred subjects or narratives that run through this entire archive, and then I suddenly have an 88-million-document problem that I have summarized in 200 or 300 ideas," notes Perez-Cruz.

And if algorithms can organize 88 million pages of text into a few hundred buckets, says, Perez-Cruz, a huge leap in organization and efficiency has been made for the historians and researchers who need to make decisions about which specific documents, themes or time periods to search for, review and analyze in the previously unwieldy archive.

The same idea could work to find trends, themes and hidden meaning in other large unread databases such as raw medical data, he adds.

"You begin with a large quantity of unstructured data, and you want to bring structure to the data to understand what information that data contains and what decisions to make about it and with it," he concludes. "Once you understand the data, you can begin to read it in a specific manner, understand more clearly what questions to ask of it, and make better decisions."