Prepared for Anything: Stevens Professor Builds Secure Drones to Withstand Cyber–Physical Attacks

A new lab at Stevens will ensure that autonomous vehicles are safe and secure enough to protect human life

As technology advances, so do efforts to thwart it: increasingly sophisticated cyberattacks cost victimized companies an average of $200,000 in damages per attack. Meanwhile, as huge investments flow into the development of unmanned vehicles, from delivery drones to passenger transportation to robotic surgery in space, the stakes of security have risen. How do we protect these physical machines with computer components from malicious attacks that threaten not only finances but actual human life?

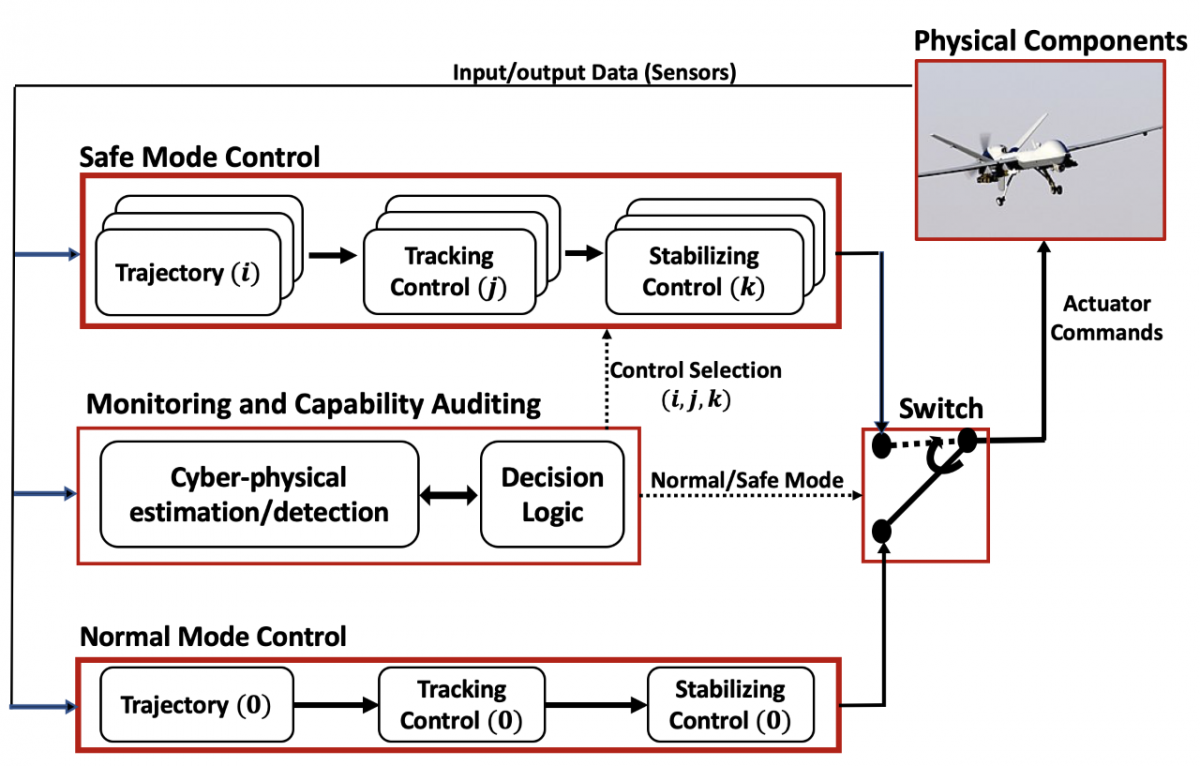

Hamid Jafarnejad Sani, assistant professor in the Department of Mechanical Engineering at Stevens Institute of Technology, is building smart systems with a series of checks and backups to prevent autonomous drones from failing in the face of inclement weather, software bugs, or cyberattacks. These drones, he hopes, will be prepared for anything—even the unpredictable and unknown. His new lab, the Safe Autonomous Systems Laboratory, will open later this year, seeking to answer the question, how can we make autonomous vehicles safer and more secure?

“Safety” and “security” are closely related, yet distinct, aspects of resiliency in this work. Security refers to deliberate attacks, such as hacking and terrorism, while safety encompasses accidents and disturbances in the environment, such as bad weather. Either kind of event can cause a failure.

This realm of research is known as cyber–physical security, a name that alludes to both the physical and software components of an autonomous vehicle. This field is naturally interdisciplinary, with university, government, and industry researchers at top-tier facilities expected to invest over $1 trillion in cybersecurity between 2017 and 2021, Jafarnejad Sani explained.

Machine learning plays a fundamental role in designing these secure systems. Said Jafarnejad Sani, “In navigation of autonomous systems, machine learning algorithms are used for object detection and localization using available sensor data such as images.”

He describes his research focus as “resilient and secure control in cyber–physical autonomous systems,” with applications as diverse as smart grids, financial systems, biomedical systems, and transportation networks, each of which is susceptible to an increasing number of cyberattacks.

“The cyber–physical nature of autonomous systems makes them vulnerable to sophisticated attacks,” said Jafarnejad Sani. “Sensors, actuators, communication systems, and so forth can also be subject to malicious code injection. [Autonomous systems] are susceptible to terrorism and acts of war, which is different from pure cyberattacks, because those don’t typically involve loss of human life. In this sense, security is even more crucial.”

While engineers and computer scientists, and even psychologists, have been developing solutions to what is truly a life-and-death problem, there hasn’t been a unified approach to deal with both the physical and cyber aspects of these systems, Jafarnejad Sani explained. “The first step in launching a cyberattack is social engineering, for example, sending phishing emails. Therefore, the social psychology of cyberattacks is important to study,” he said, continuing, “There is a need to bridge the gap between control and software verification communities for the better understanding of cyber–physical systems (CPS) security.”

Jafarnejad Sani’s lab, which is currently under construction, will address the full CPS of autonomous vehicles, beginning with drones. Before testing a physical drone, he and his students will continue to develop computer simulations and algorithms to model the drone’s performance and reactions in a virtual environment. Only after extensive simulation testing will the actual drone be built. The lab will house an indoor flight arena equipped with a Vicon motion capture camera system and off-board computing workstations for design, testing, and verification using aerial and ground robots. The lab will also enable investigations into stability protection, adaptation to uncertain conditions, and resilience to system failures.

“If we have multiple autonomous systems that are communicating, how can we mitigate stealthy attacks through better design?” asked Jafarnejad Sani. “It is a challenging problem to defend against, even with the intervention of a human operator.”

Creating a seamless relationship between the physical and cyber aspects of these autonomous systems is a tall order, but Jafarnejad Sani is up for the challenge.

“We develop control and estimation algorithms for the safe navigation of autonomous systems in the environment, subject to a wide range of uncertainties and failures,” he said. “We notice that the network and communications for a team of collaborative autonomous systems are susceptible to malicious cyberattacks. We develop resilient detection, isolation, and recovery algorithms for these collaborative systems under cyberattacks.”

“In dealing with complex CPS, we try to formulate problems that capture this issue in a systematic, rigorous way,” he continued.
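To make the idea of detection, isolation, and recovery concrete, here is a minimal sketch in Python. It is an illustration only, not the lab's method: the drone names, the altitude readings, the median-based outlier test, and the `threshold` value are all assumptions chosen for simplicity. Each drone in a team reports an estimate of a shared quantity; a report far from the team median is flagged (detection and isolation), and the team falls back on the average of the remaining reports (recovery).

```python
from statistics import median


def detect_and_isolate(readings, threshold=1.0):
    """Return the names of agents whose report is far from the team median.

    readings:  dict mapping a (hypothetical) agent name to its reported value
    threshold: assumed maximum acceptable deviation from the median
    """
    center = median(readings.values())
    return {name for name, value in readings.items()
            if abs(value - center) > threshold}


def recover(readings, flagged):
    """Average only the reports from agents that were not flagged."""
    trusted = [value for name, value in readings.items()
               if name not in flagged]
    if not trusted:
        raise ValueError("no trusted readings left to recover from")
    return sum(trusted) / len(trusted)


# Hypothetical altitude reports (meters); drone_d's data has been tampered with.
reports = {"drone_a": 10.0, "drone_b": 10.2, "drone_c": 9.9, "drone_d": 25.0}

suspects = detect_and_isolate(reports)
estimate = recover(reports, suspects)
print(sorted(suspects), round(estimate, 2))
```

A simple median test like this only catches blatant outliers. The stealthy attacks described in the article are designed to stay under such thresholds, which is part of what makes the research problem hard.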

While many grand challenges need to be dealt with before machine learning methods become practical in intelligent, safety-critical systems, Jafarnejad Sani’s research aims to address safe and secure control of autonomous systems by integrating the tools from robust and adaptive control with machine learning and cyber–physical security methods.

“I am excited about the interdisciplinary aspect of this research, which lies at the intersection of control theory, artificial intelligence, and cyber–physical security, with a potential impact on safe autonomous systems of the future,” he said.